Copyright

This document as well as the accompanying code, Jupyter Notebooks, and other materials on the Quant Platform (http://pqp.io) and Github are copyrighted and only intended for personal use. Any kind of sharing, distribution, duplication, commercial use, etc. without written permission by Dr. Yves J. Hilpisch is prohibited.

The contents, Python code, Jupyter Notebooks, and other materials of this book come without warranties or representations, to the extent permitted by applicable law.

Notice that this document is work in progress and that substantial additions, changes, updates, etc. will take place over time. It is advised to regularly check for new versions of the document.

(c) Dr. Yves J. Hilpisch, August 2024

Preface

Tell me and I forget. Teach me and I remember. Involve me and I learn.

Why this Book?

Reinforcement learning (RL) has enabled a number of breakthroughs in artificial intelligence. One of the key algorithms in RL is deep Q-learning (DQL) that can be applied to a large number of dynamic decision problems. Popular examples are arcade games and board games, such as Go, for which RL and DQL algorithms have achieved superhuman performance in many instances. This has often happened despite the beliefs of experts that such feats would be impossible for decades to come.

Finance is a discipline with a strong connection between theory and practice. Theoretical advancements often find their way quickly into the applied domain. Many problems in finance are dynamic decision problems, such as the optimal allocation of assets over time. Therefore it is, on the one hand, theoretically interesting to apply DQL to financial problems. On the other hand, it is also in general quite easy and straightforward to apply such algorithms — usually after some thorough testing — in the financial markets.

In recent years, financial research has seen a strong growth in publications related to RL, DQL, and related methods applied to finance. However, there is hardly any resource in book form — beyond the purely theoretical ones — for those who are looking for an applied introduction to this exciting field. This book closes the gap in that it provides the required background in a concise fashion and otherwise focuses on the implementation of the algorithms in the form of self-contained Python code and the application to important financial problems.

Target Audience

This book is intended as a concise, Python-based introduction to the major ideas and elements of RL and DQL as applied to finance. It should be useful to both students and academics as well as to practitioners in search of alternatives to existing financial theories and algorithms. The book expects basic knowledge of the Python programming language, object-oriented programming, and the major Python packages used in data science and machine learning, such as NumPy, pandas, matplotlib, scikit-learn, and TensorFlow.

Overview of the Book

The book consists of the following chapters:

- Learning through Interaction

-

The first chapter focuses on learning through interaction with four major examples: probability matching, Bayesian updating, reinforcement learning (RL), and deep-Q-learning (DQL).

- Deep Q-Learning

-

The second chapter introduces concepts from dynamic programming (DP) and discusses DQL as an approach to approximate solutions to DP problems. The major theme is the derivation of optimal policies to maximize a given objective function through taking a sequence of actions and updating the optimal policy iteratively. DQL is illustrated on the basis of a DQL agent that learns to play the

CartPolegame from thegymnasiumPython package. - Financial Q-Learning

-

The third chapter develops a first finance environment that allows the DQL agent from Deep Q-Learning to learn a financial prediction game. Although the environment formally replicates the API of the

CartPoleit misses yet some important characteristics to apply RL successfully. - Simulated Data

-

The fourth chapter is about data augmentation based on Monte Carlo simulation approaches and discusses the addition of noise to historical data and the simulation of stochastic processes.

- Generated Data

-

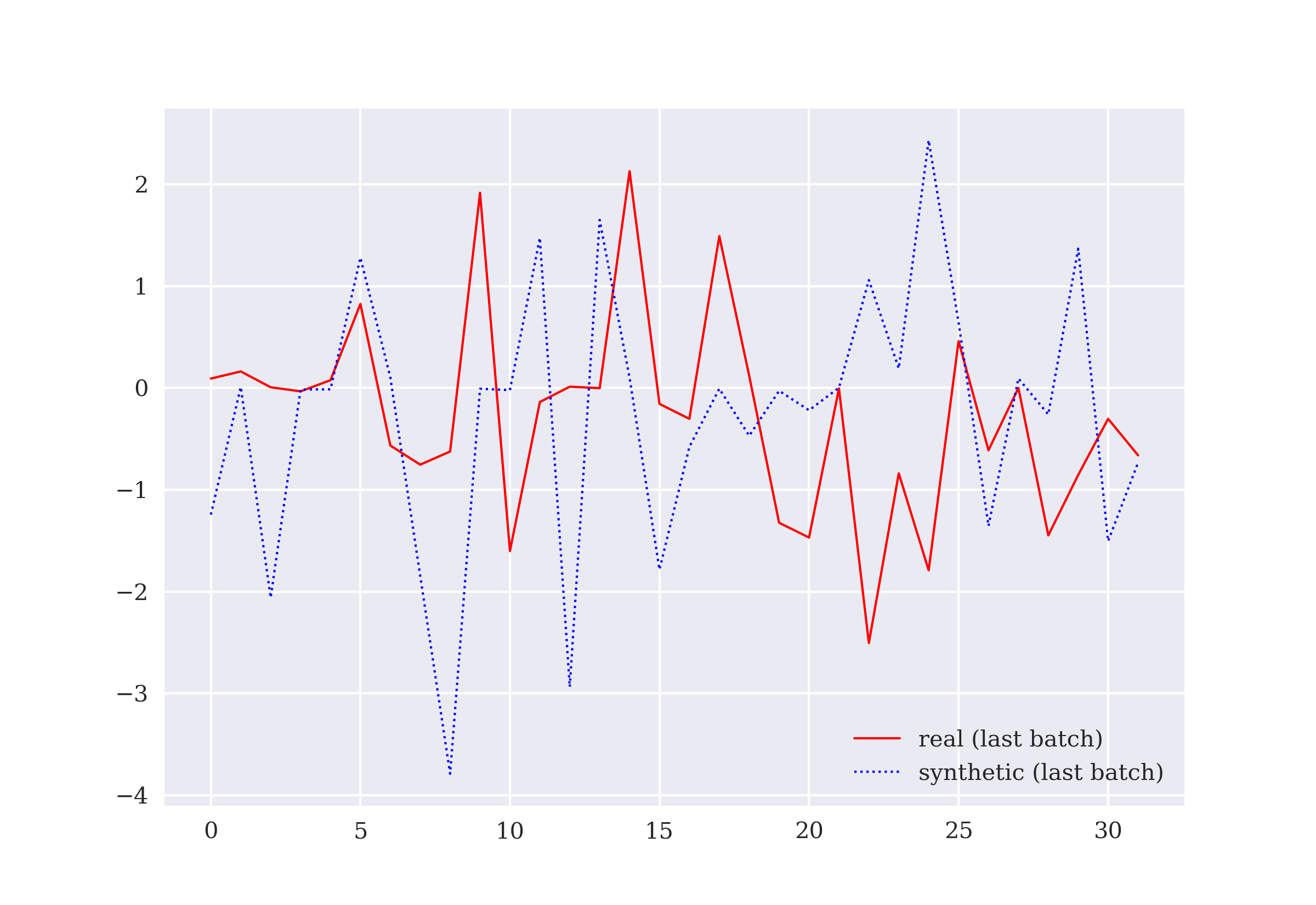

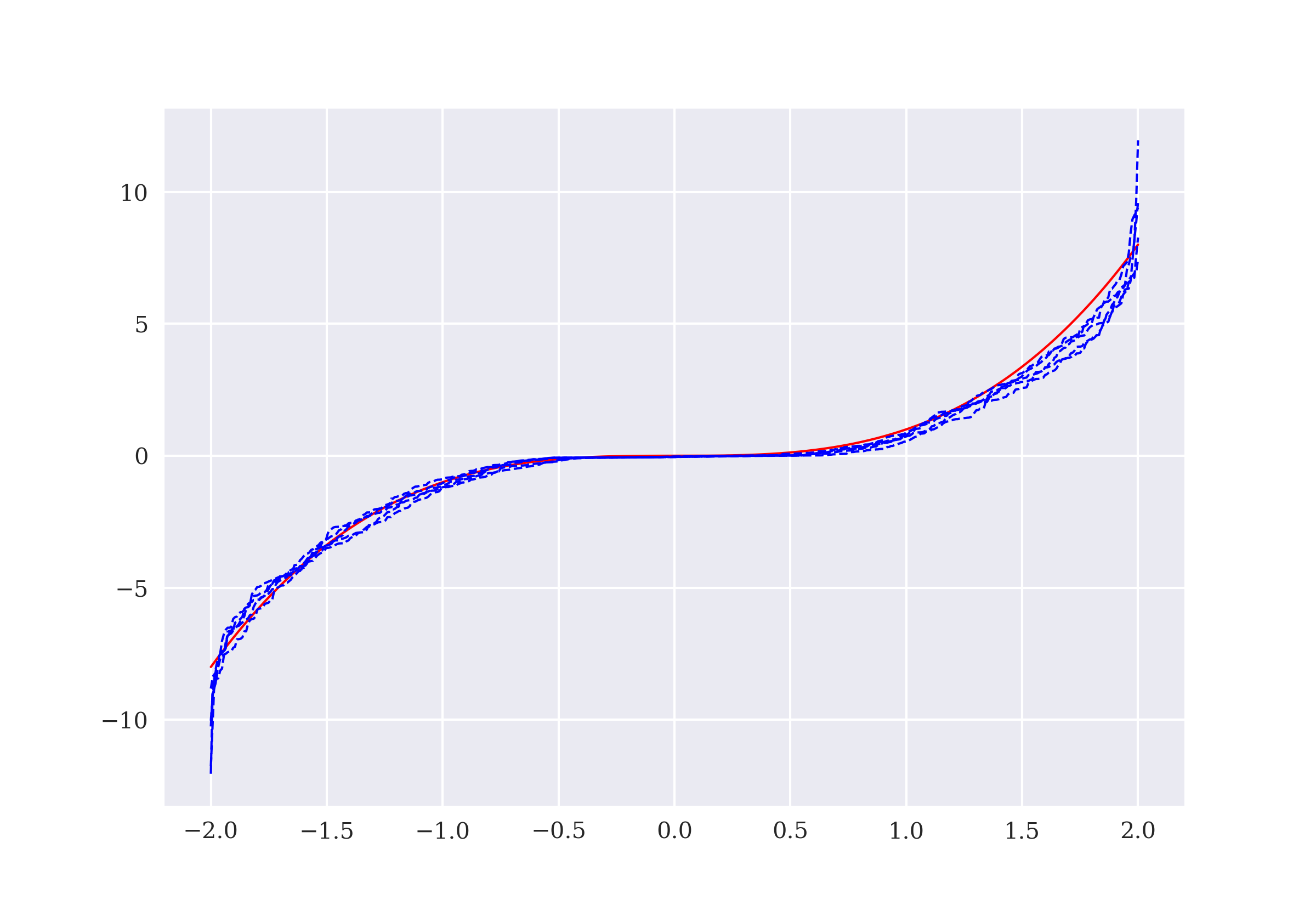

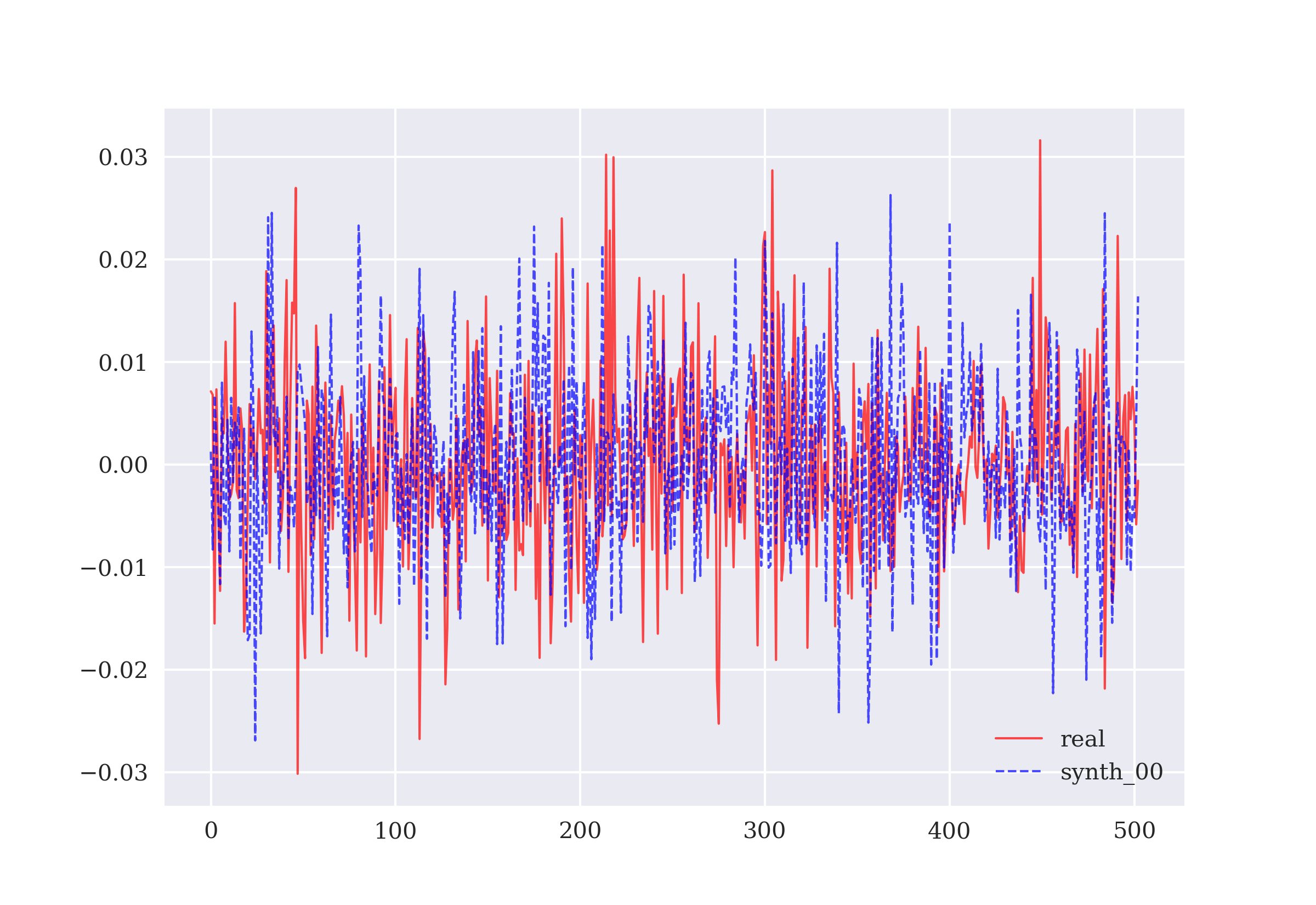

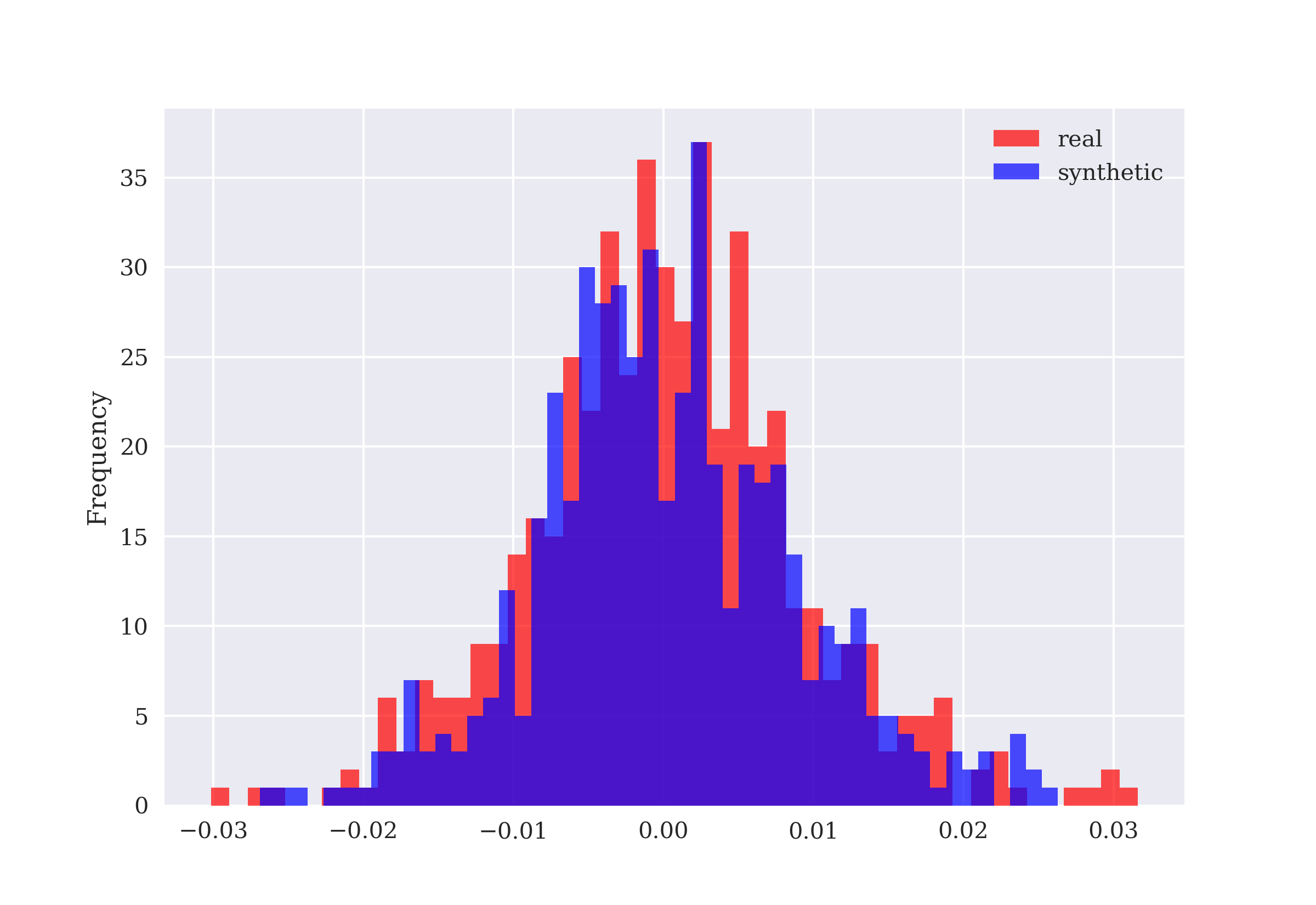

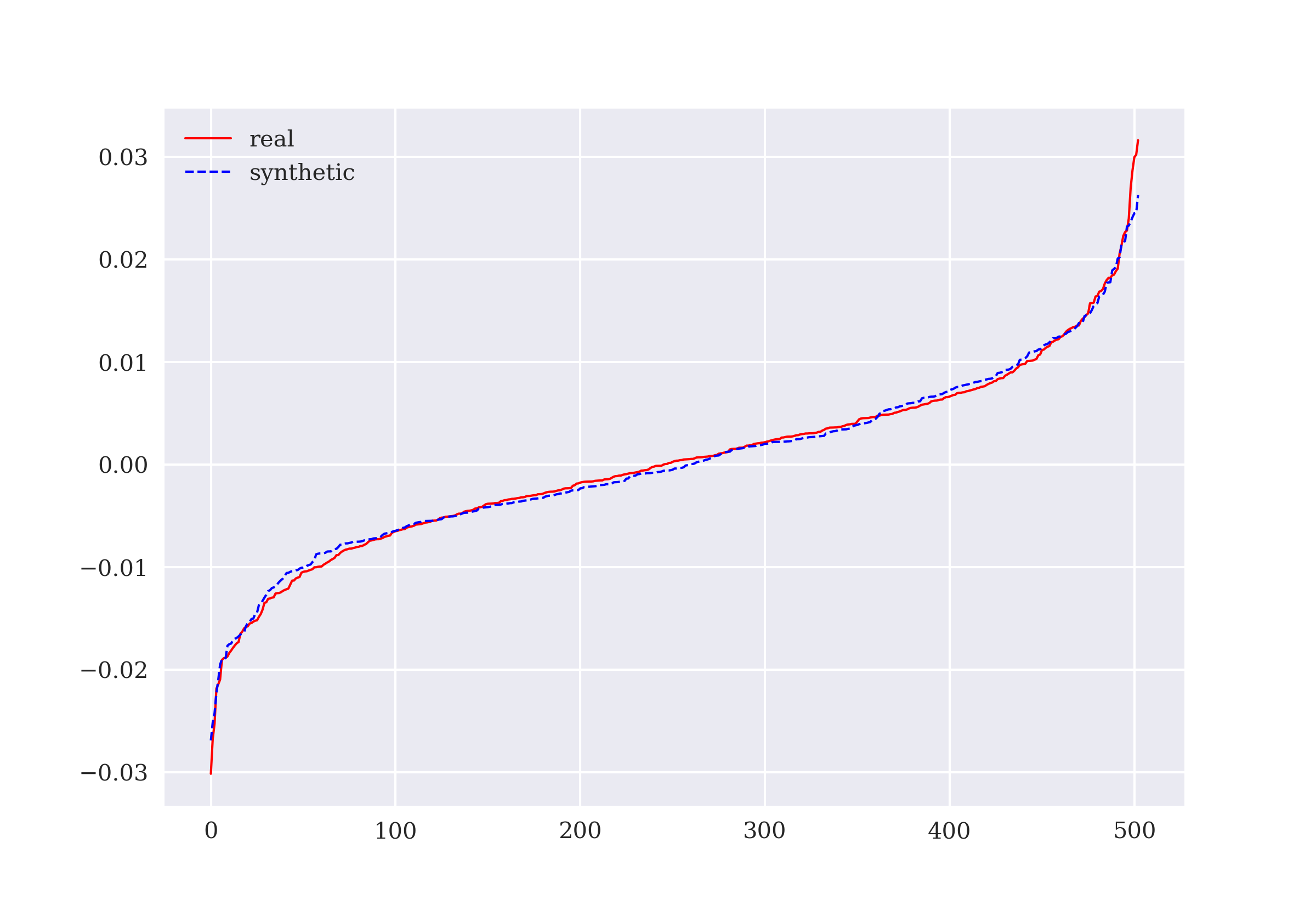

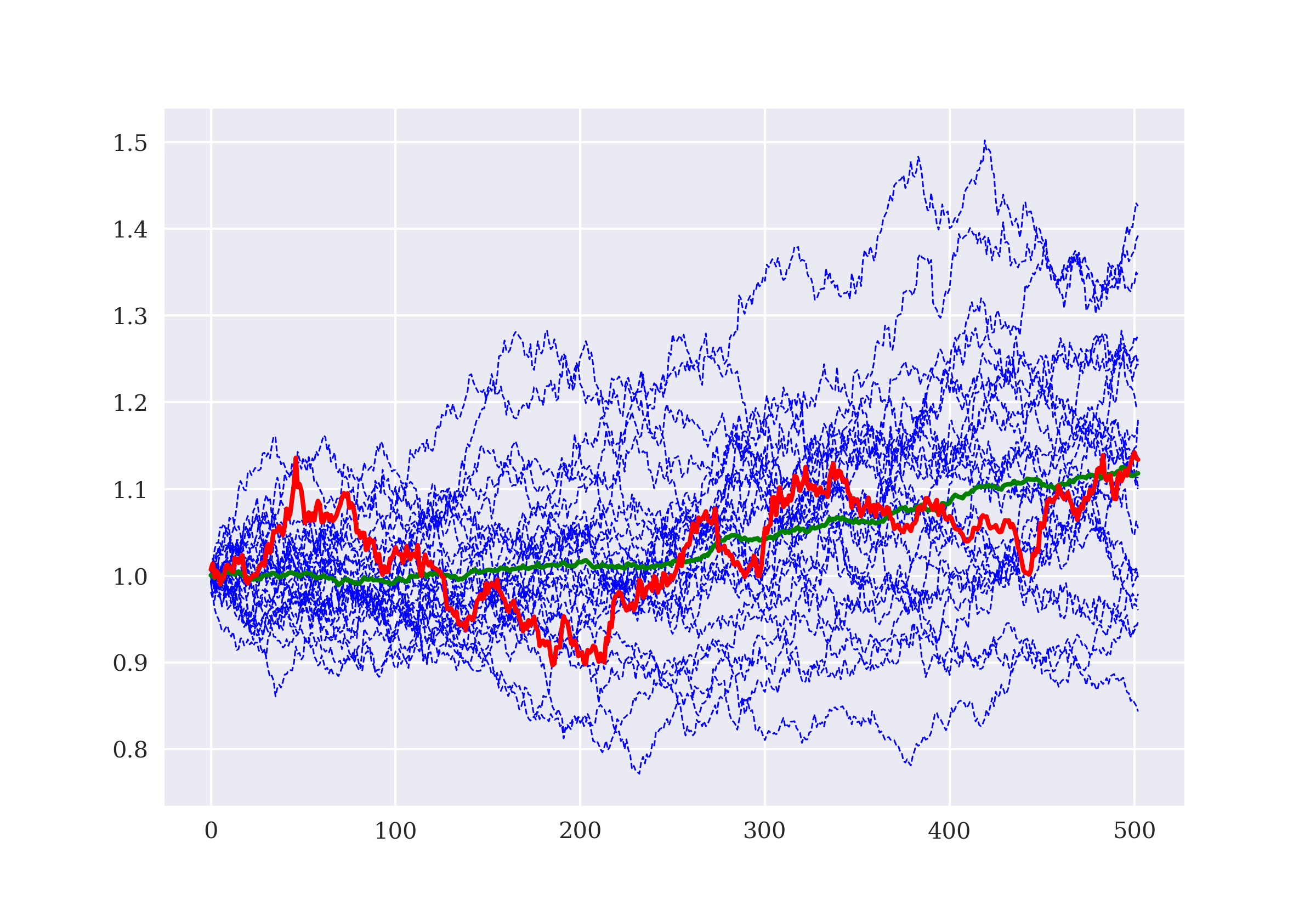

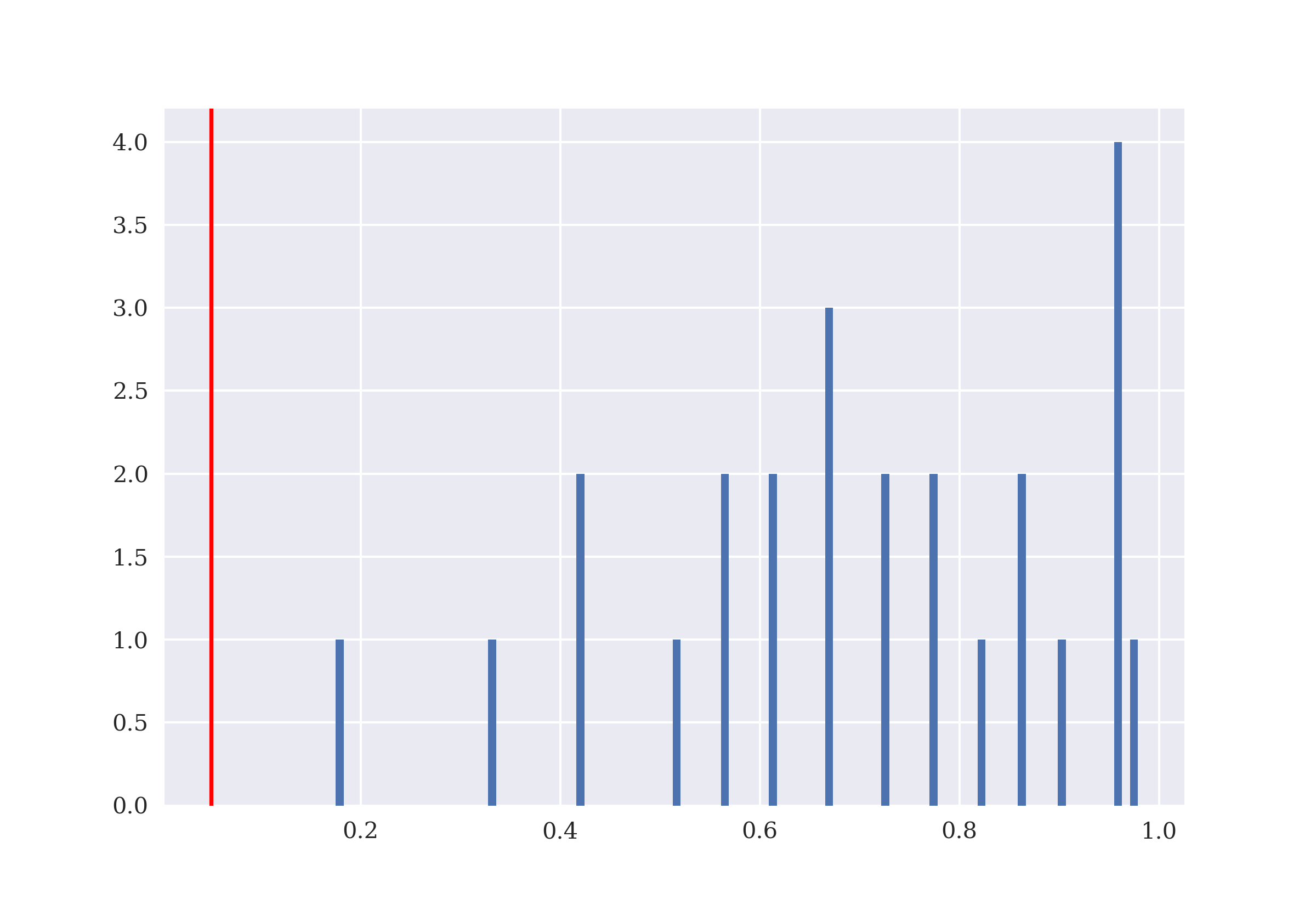

The fifth chapter introduces generative adversarial networks (GANs) to synthetically generate time series data that has similar statistical characteristics as historical time series data on which a GAN was trained on.

- Algorithmic Trading

-

Building on the example from Financial Q-Learning, this chapter applies DQL to the problem of algorithmic trading based on the prediction of the next price movement’s direction.

- Dynamic Hedging

-

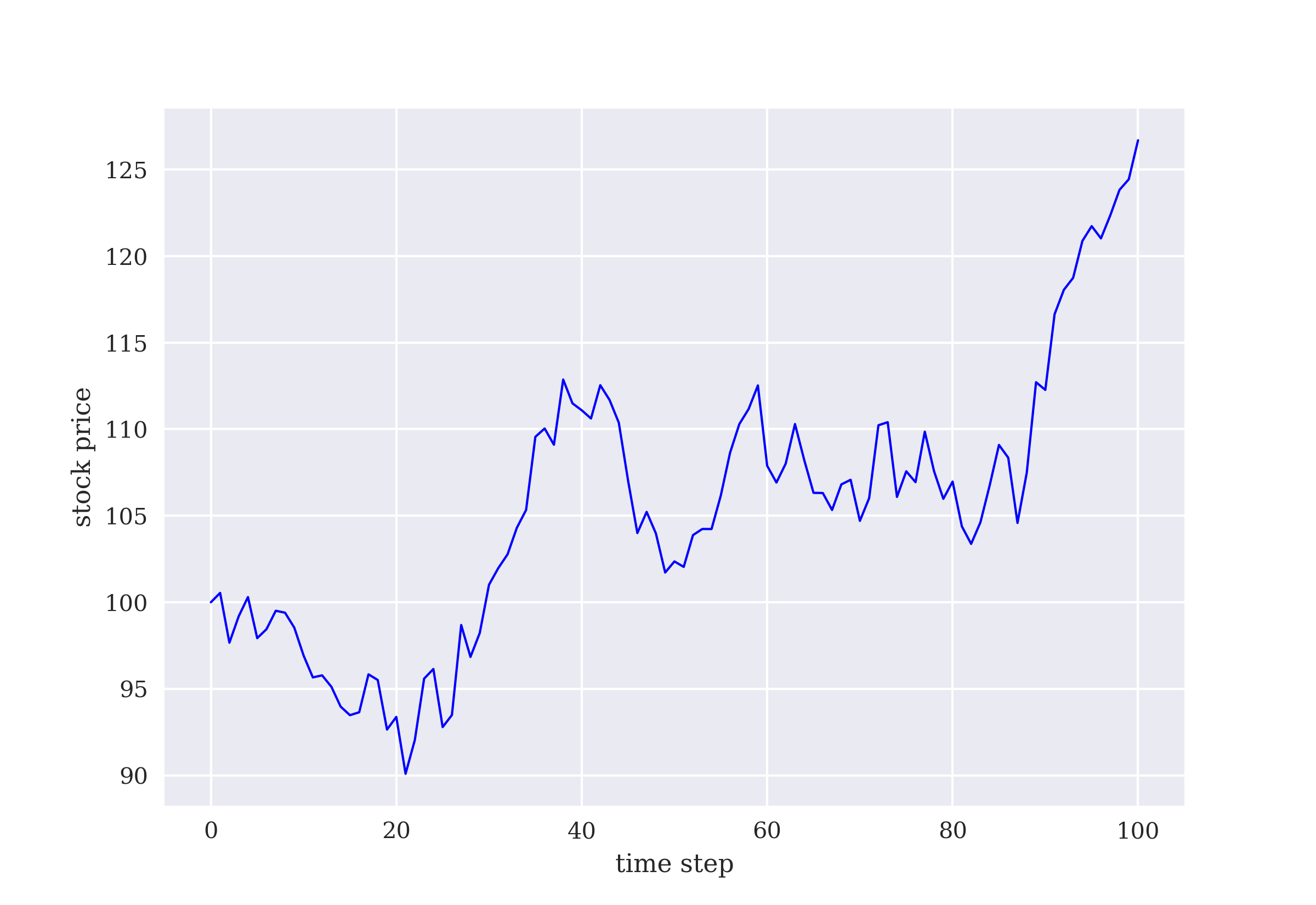

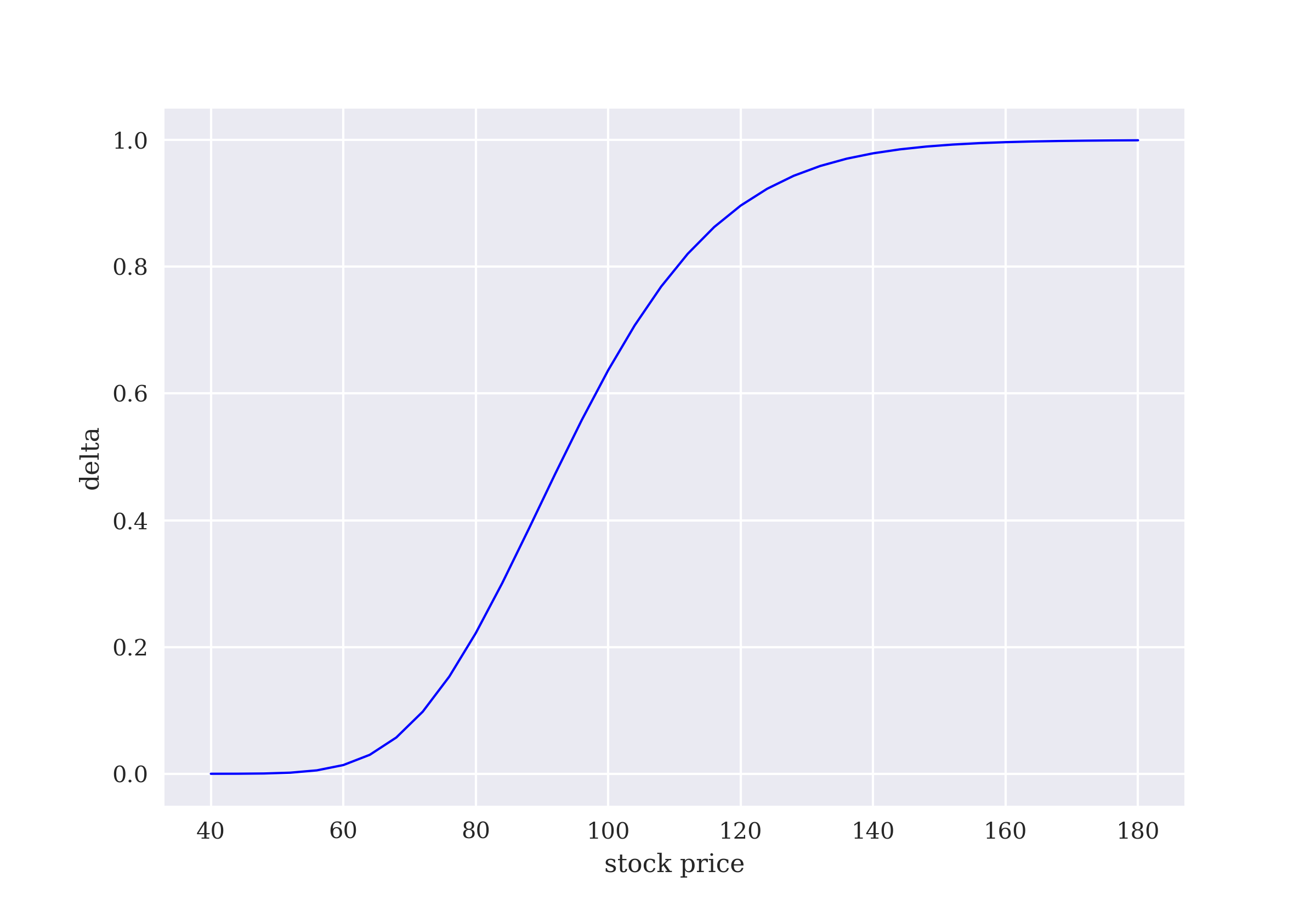

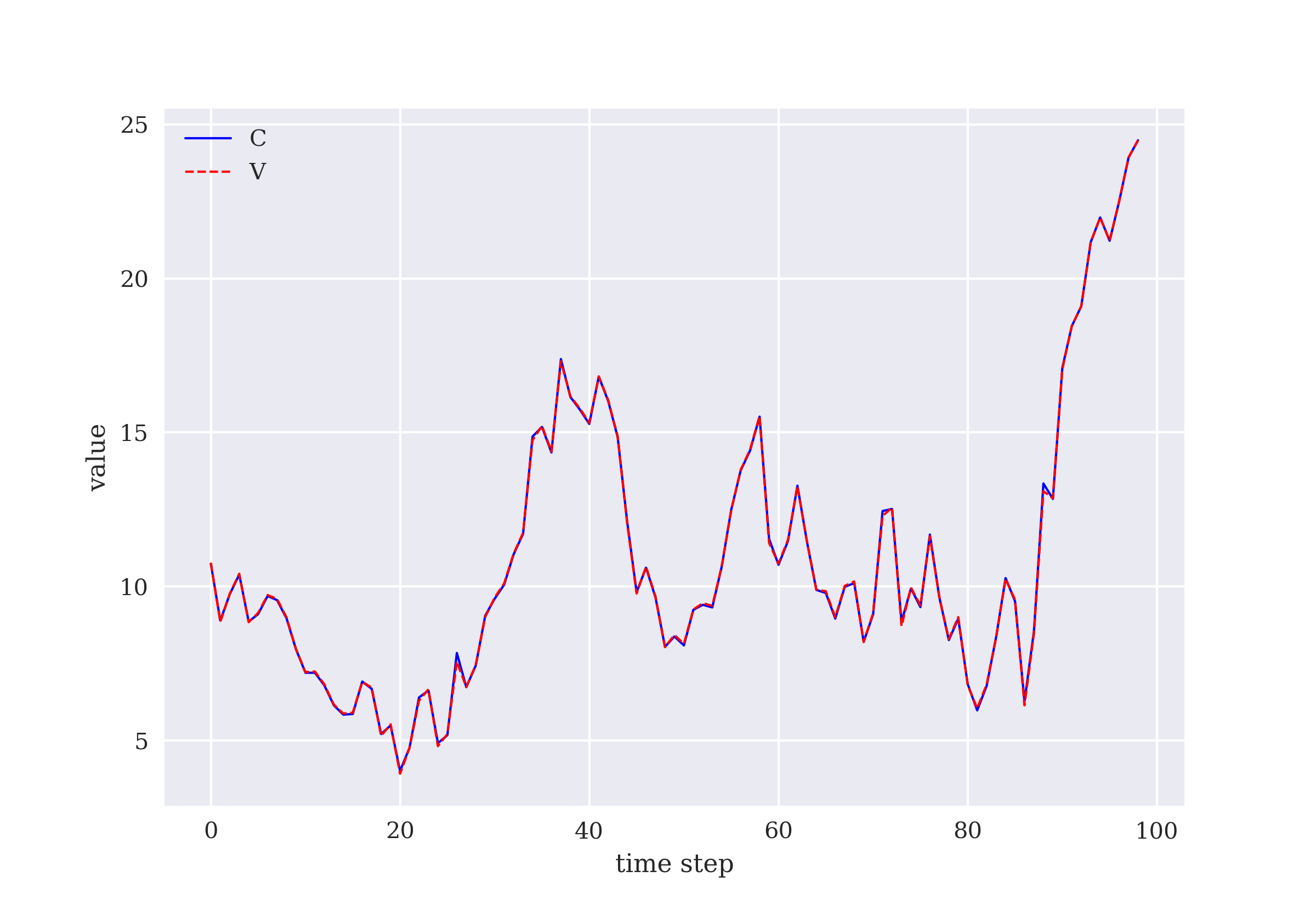

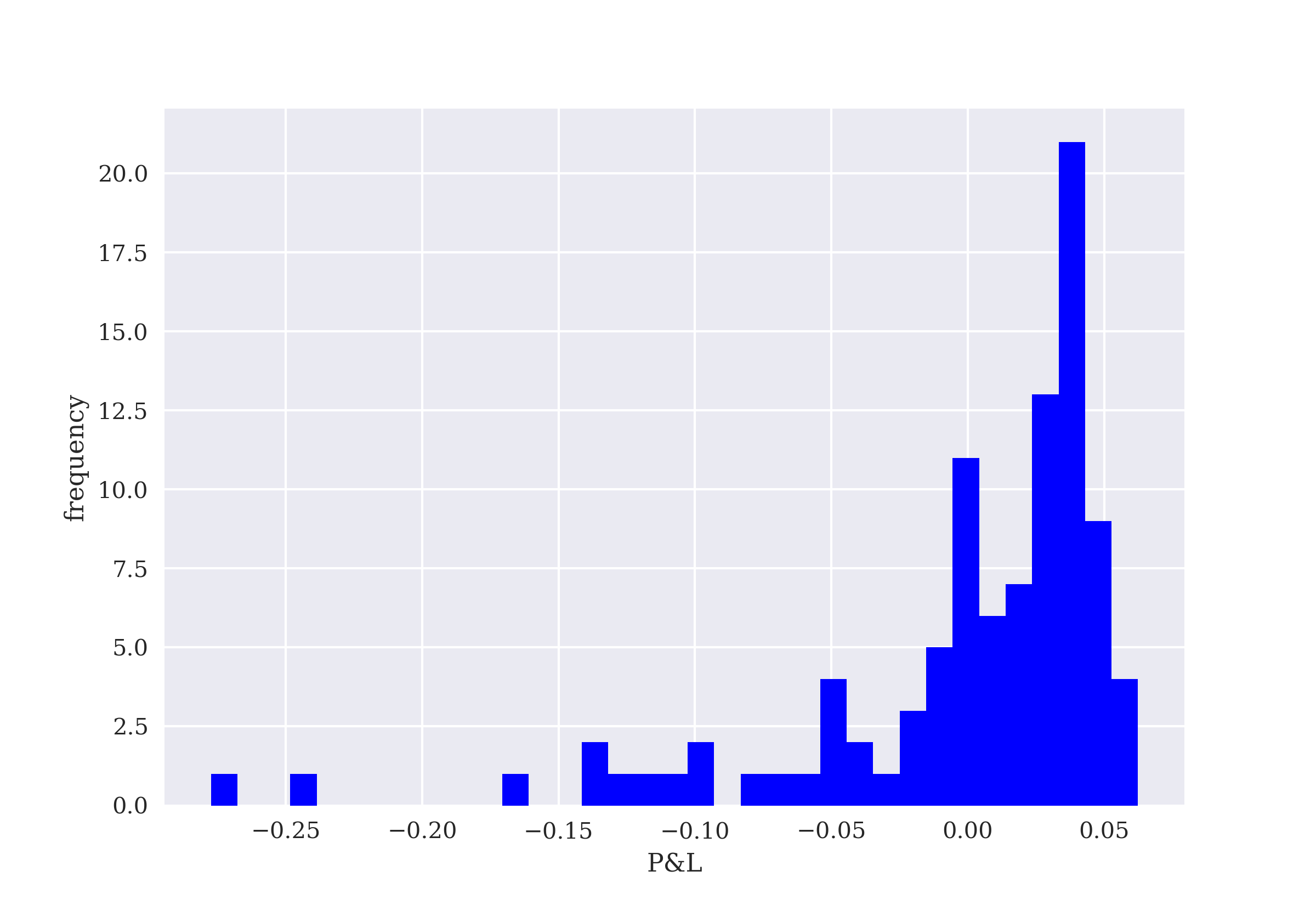

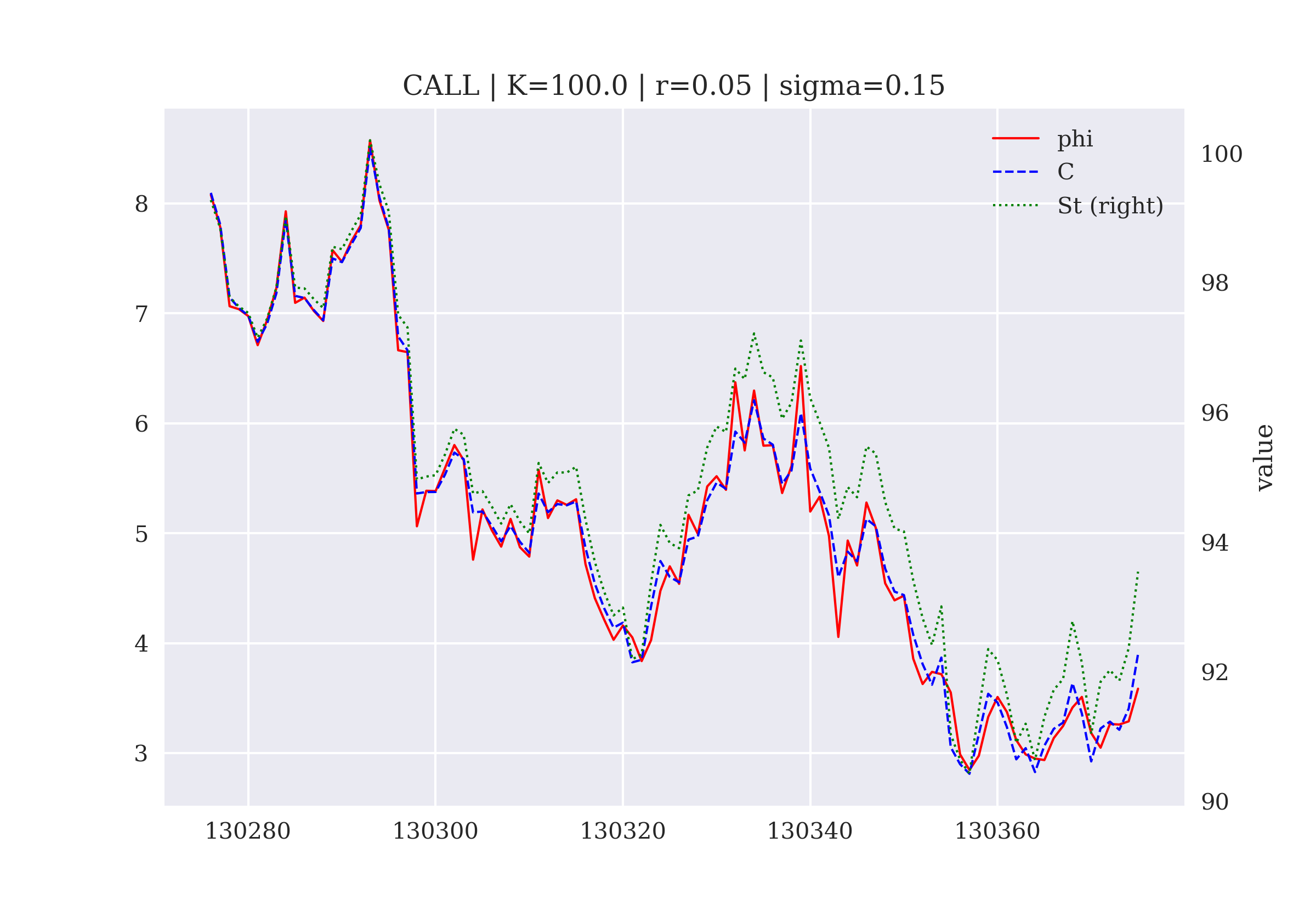

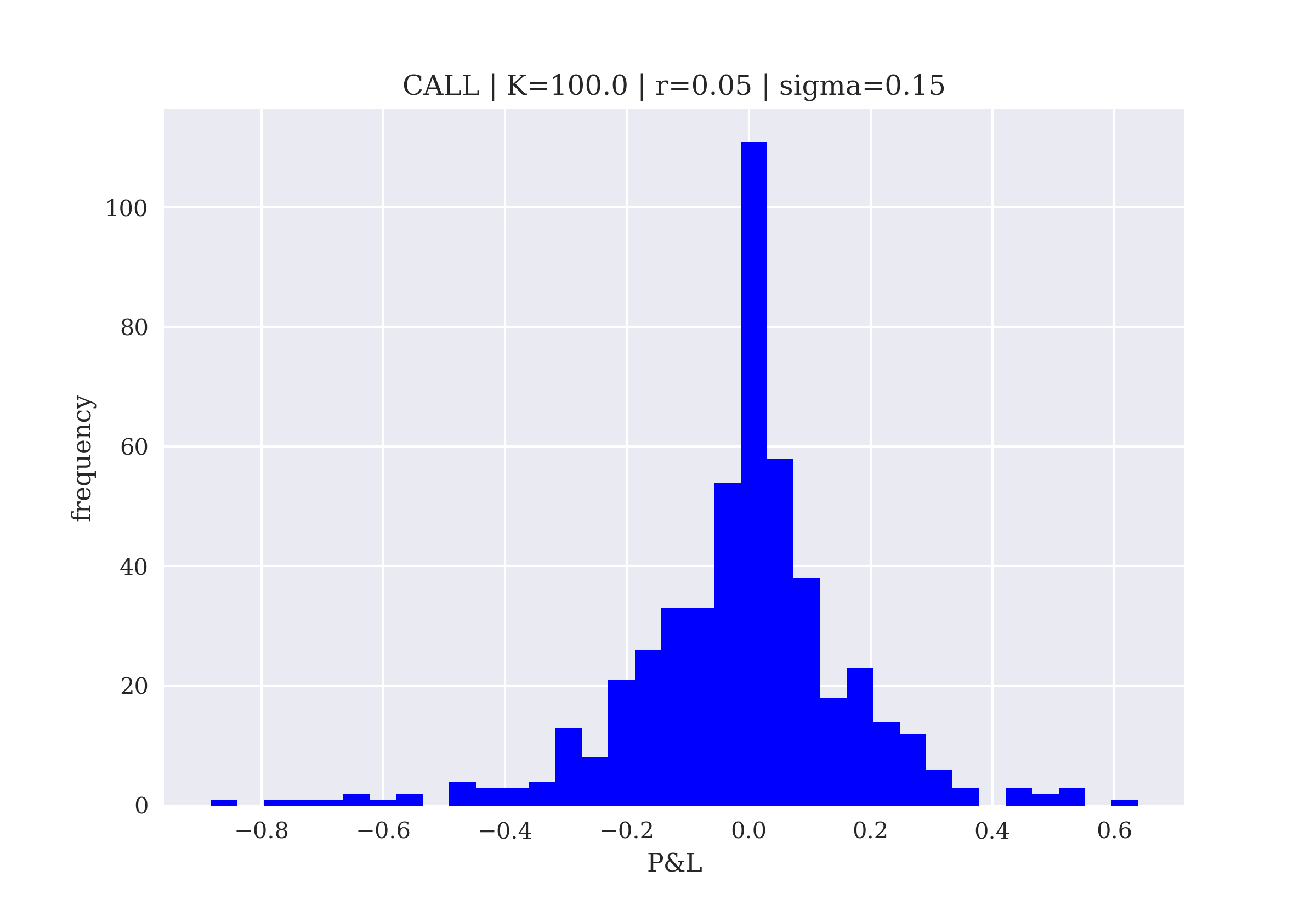

The seventh chapter is about the learning of optimal dynamic hedging strategies for an option with European exercise in the Black-Scholes-Merton (1973) model. In other words, delta hedging or dynamic replication of the option is the goal.

- Dynamic Asset Allocation

-

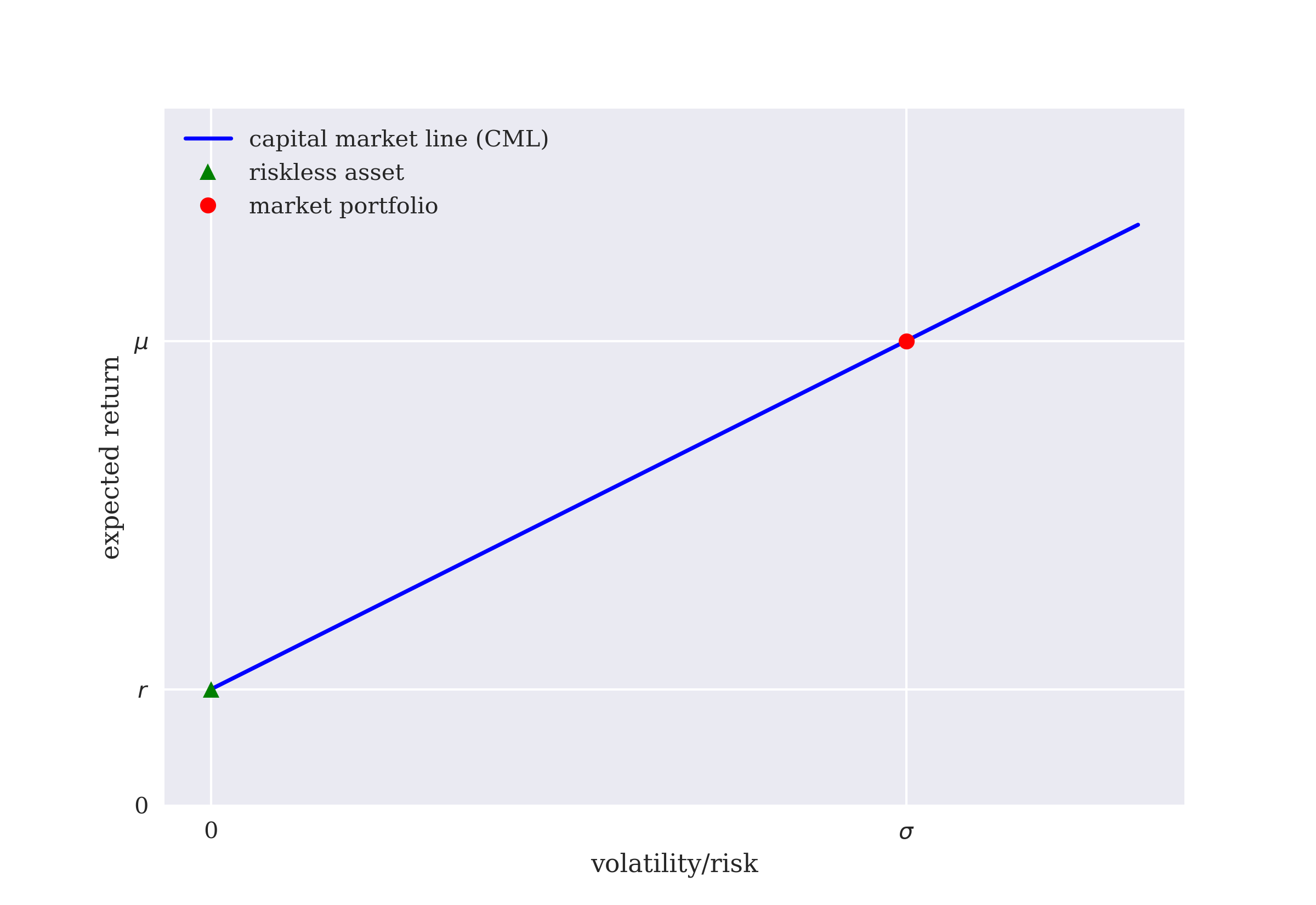

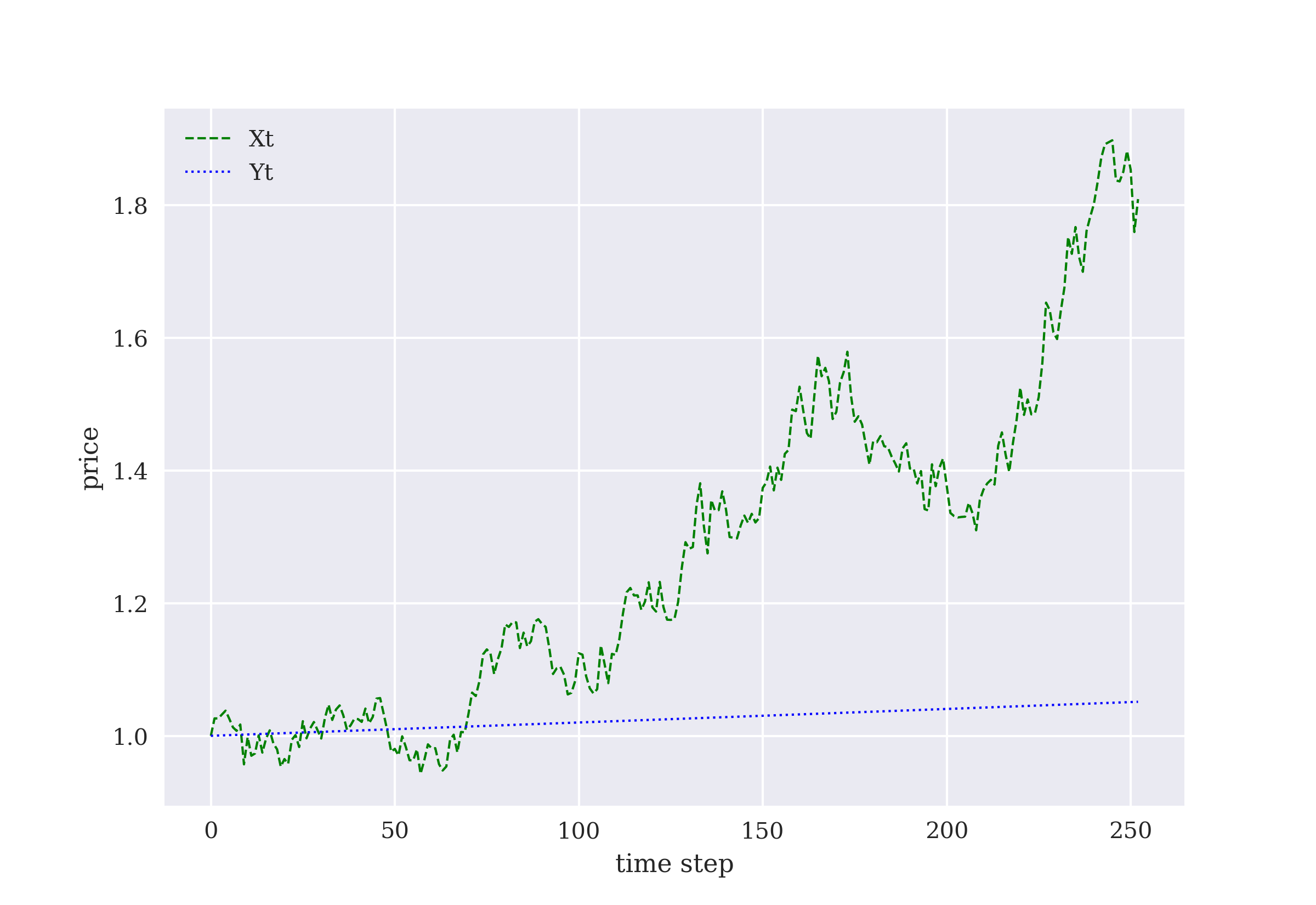

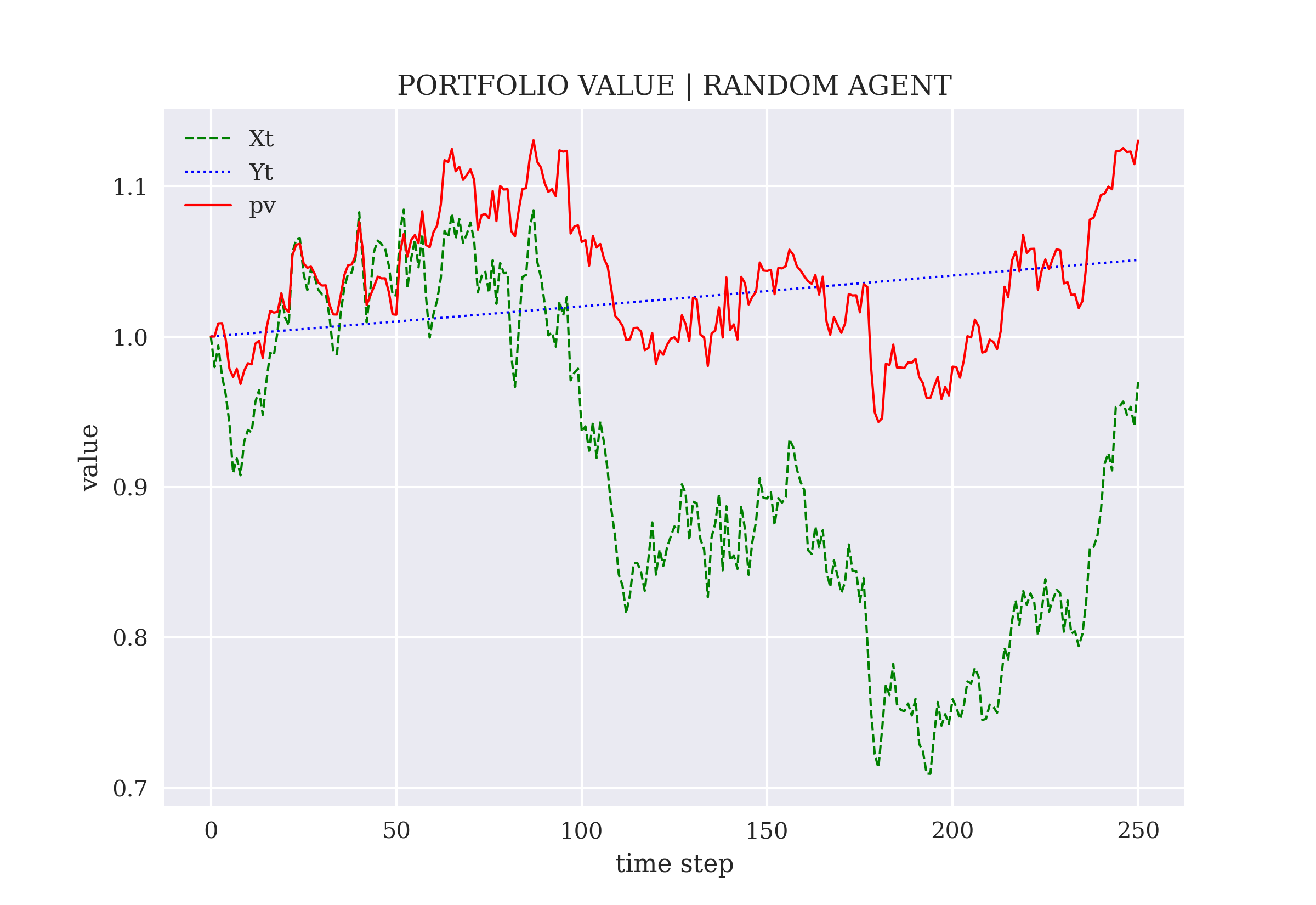

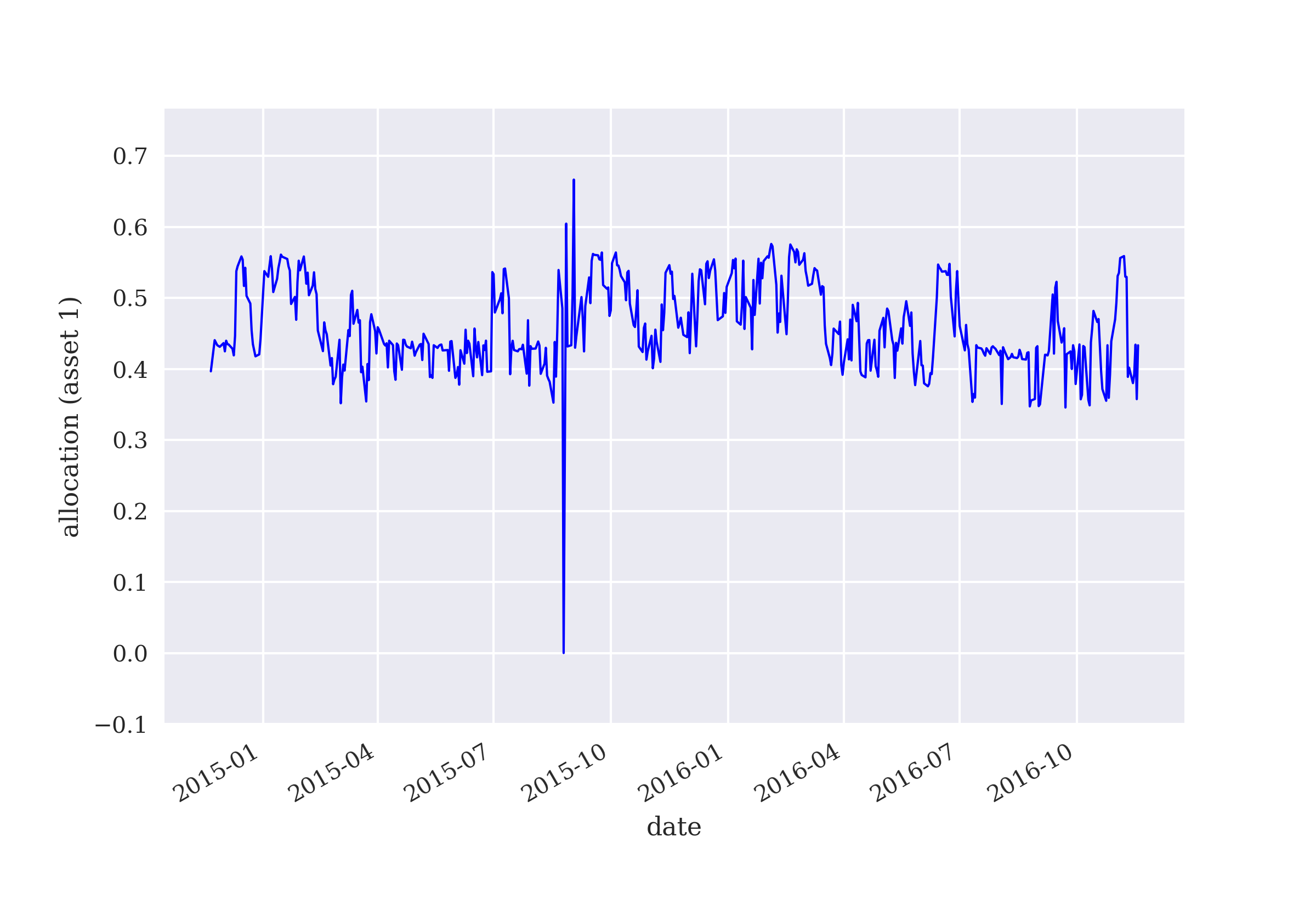

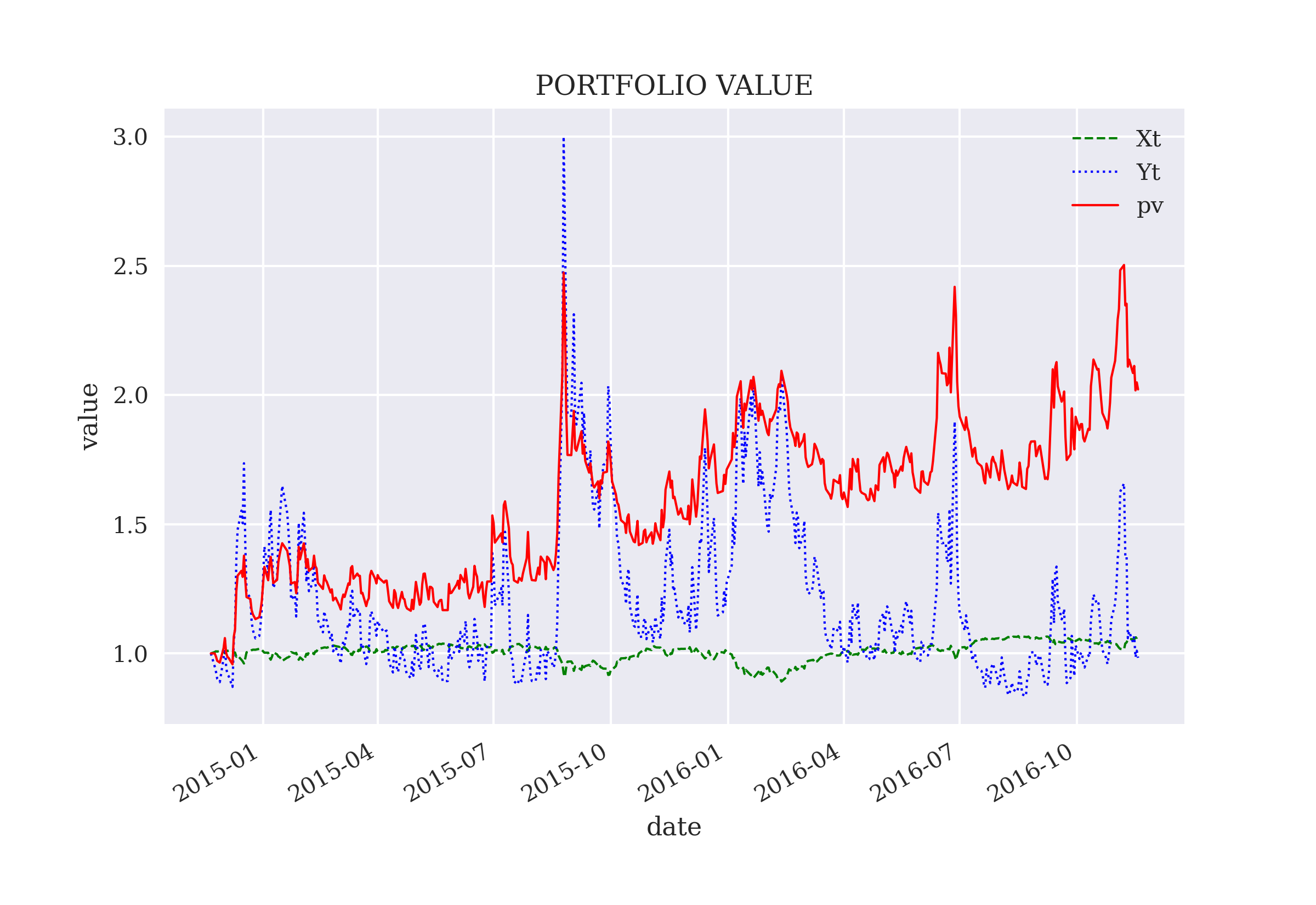

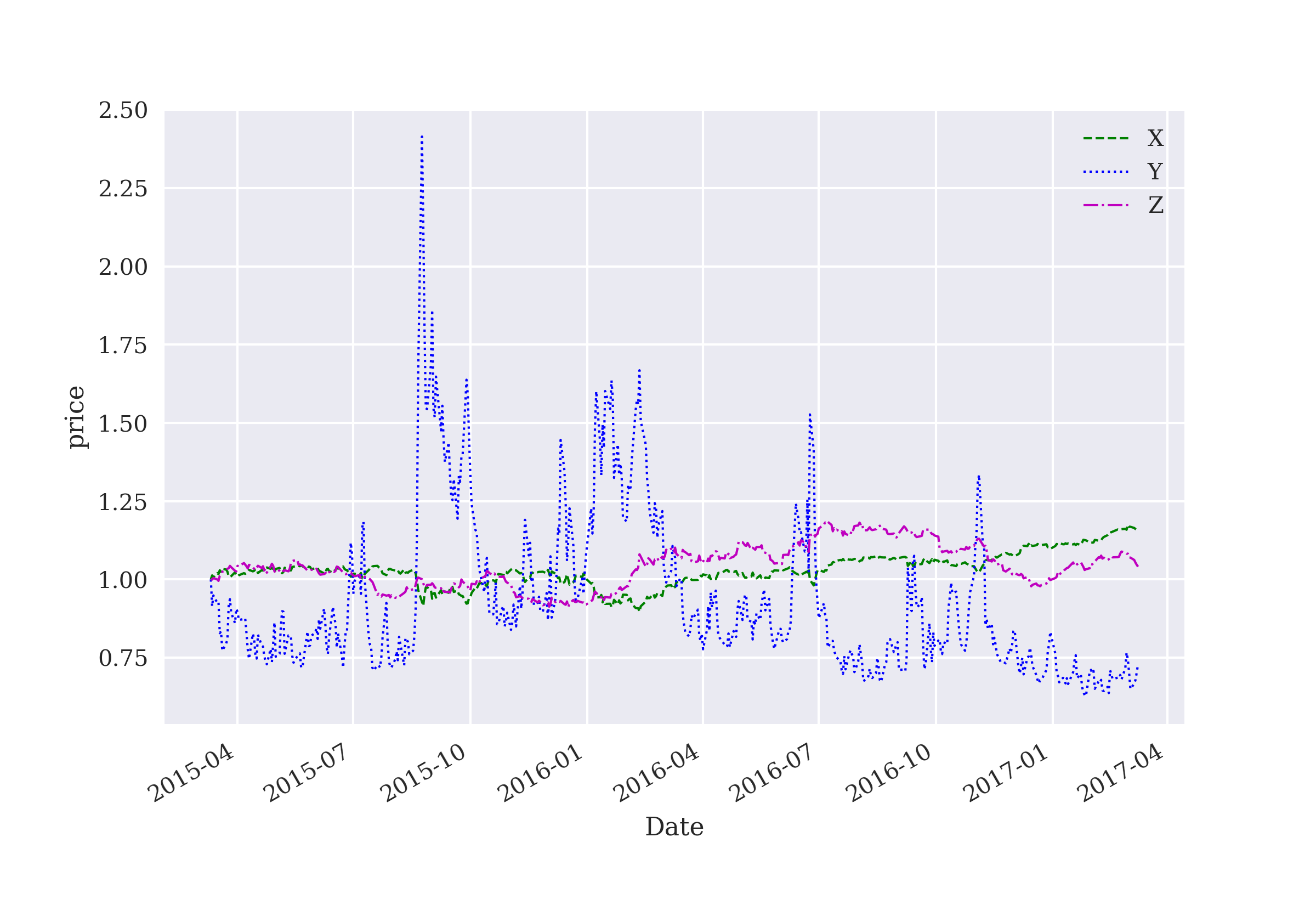

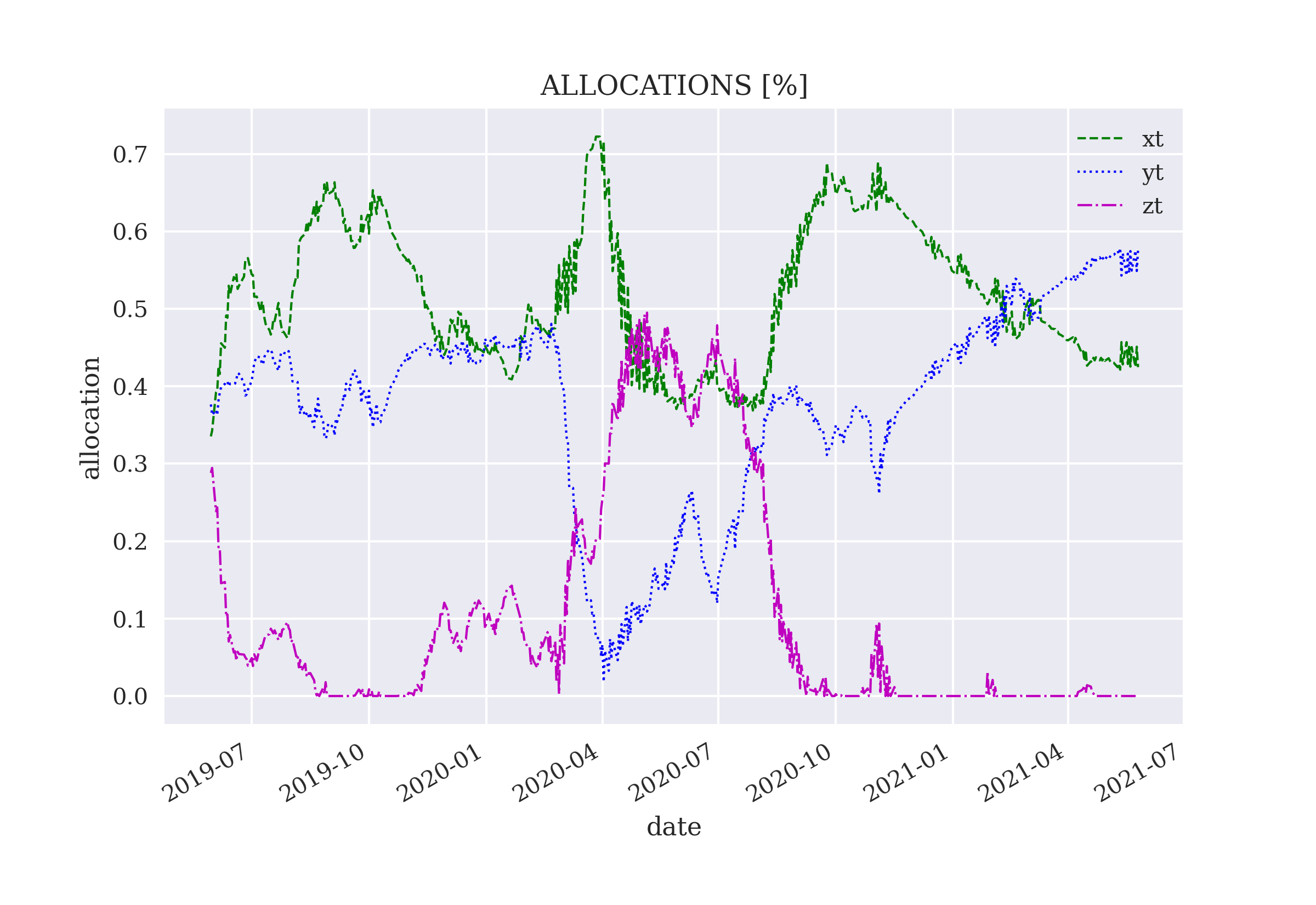

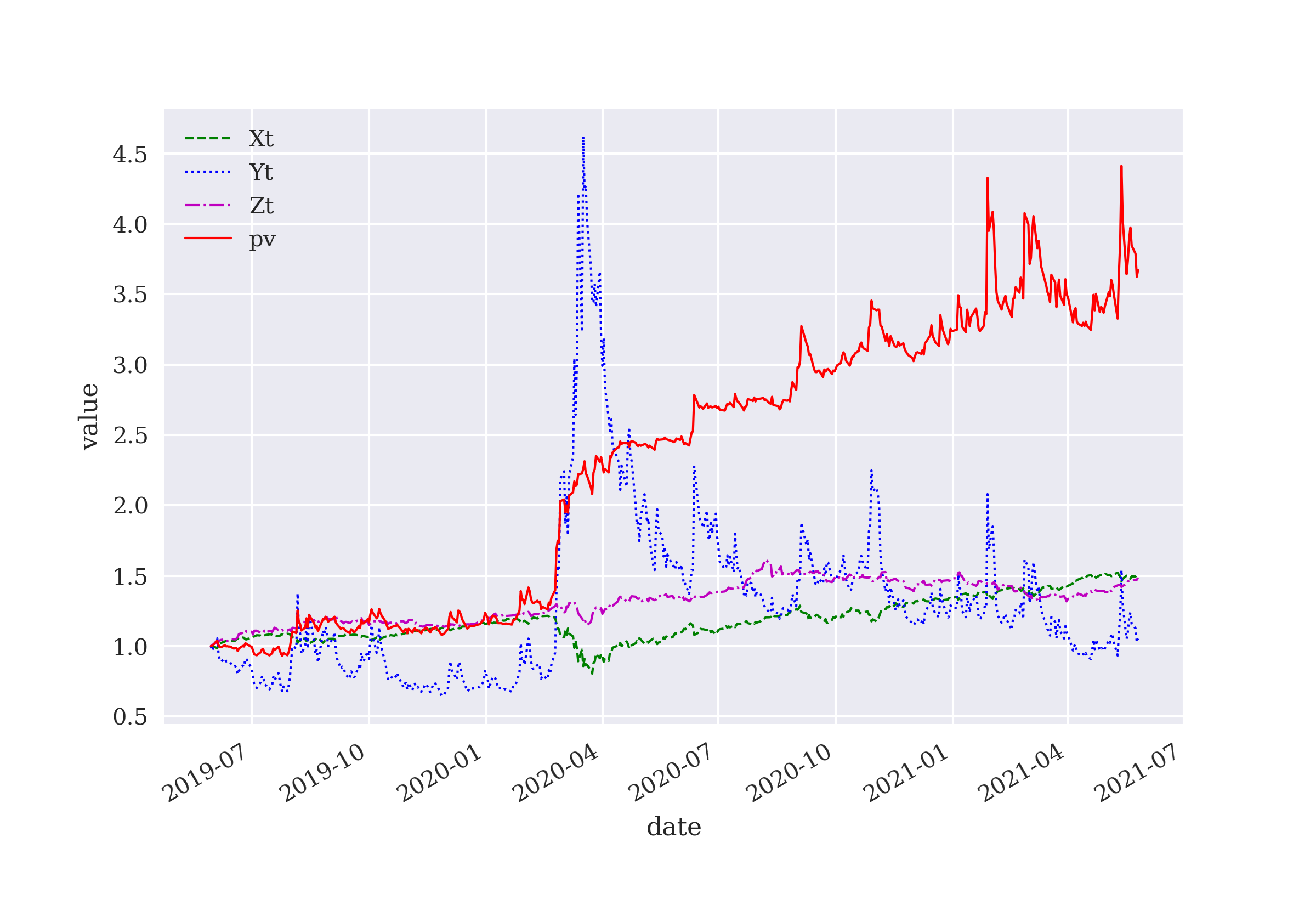

This chapter applies DQL to three canonical examples in asset management: one risky asset and one risk-less asset, two risky assets, and three risky assets. The problem is to dynamically allocate funds to the available assets to maximize a profit target or a risk-adjusted return (Sharpe ratio).

- Optimal Execution

-

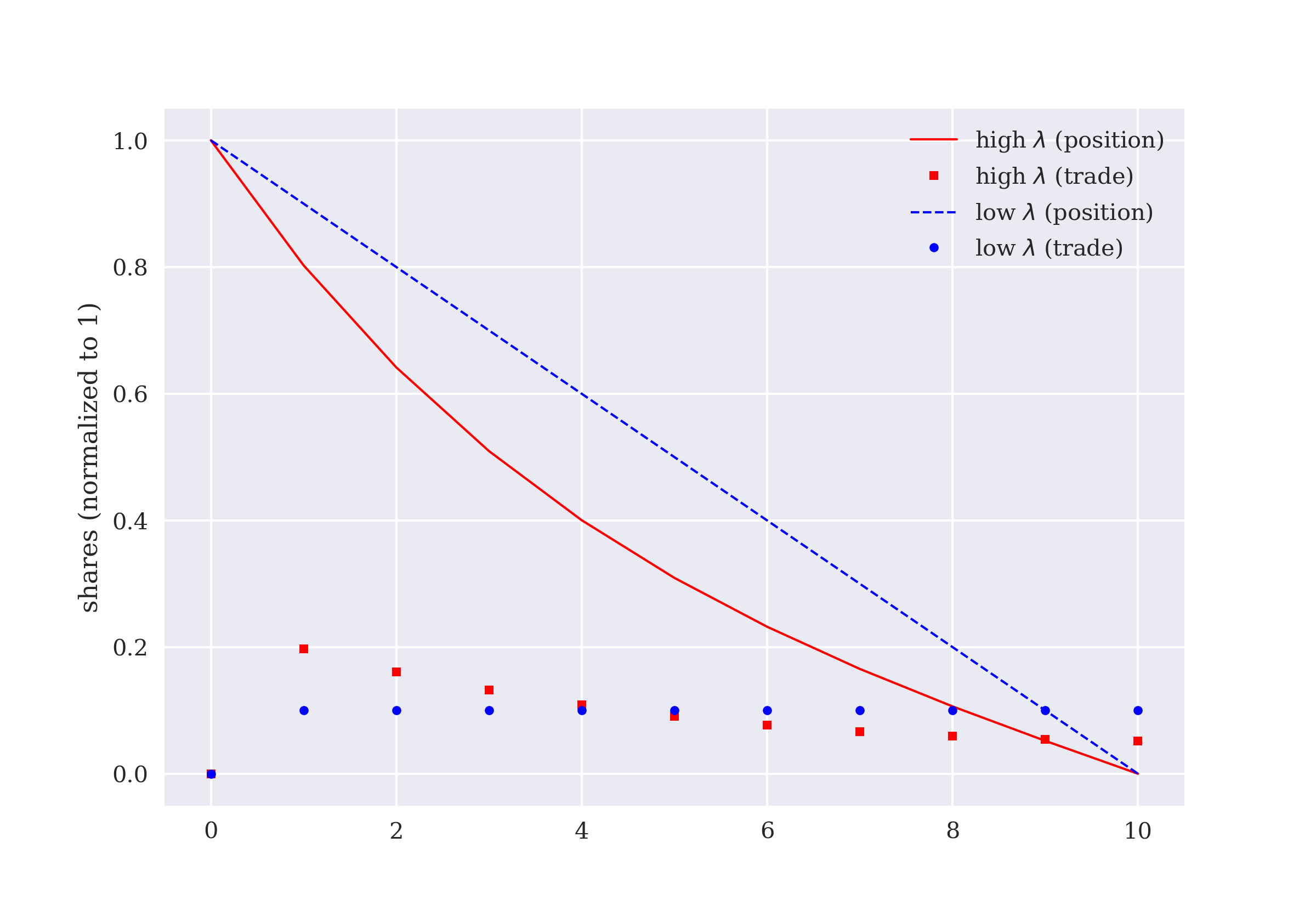

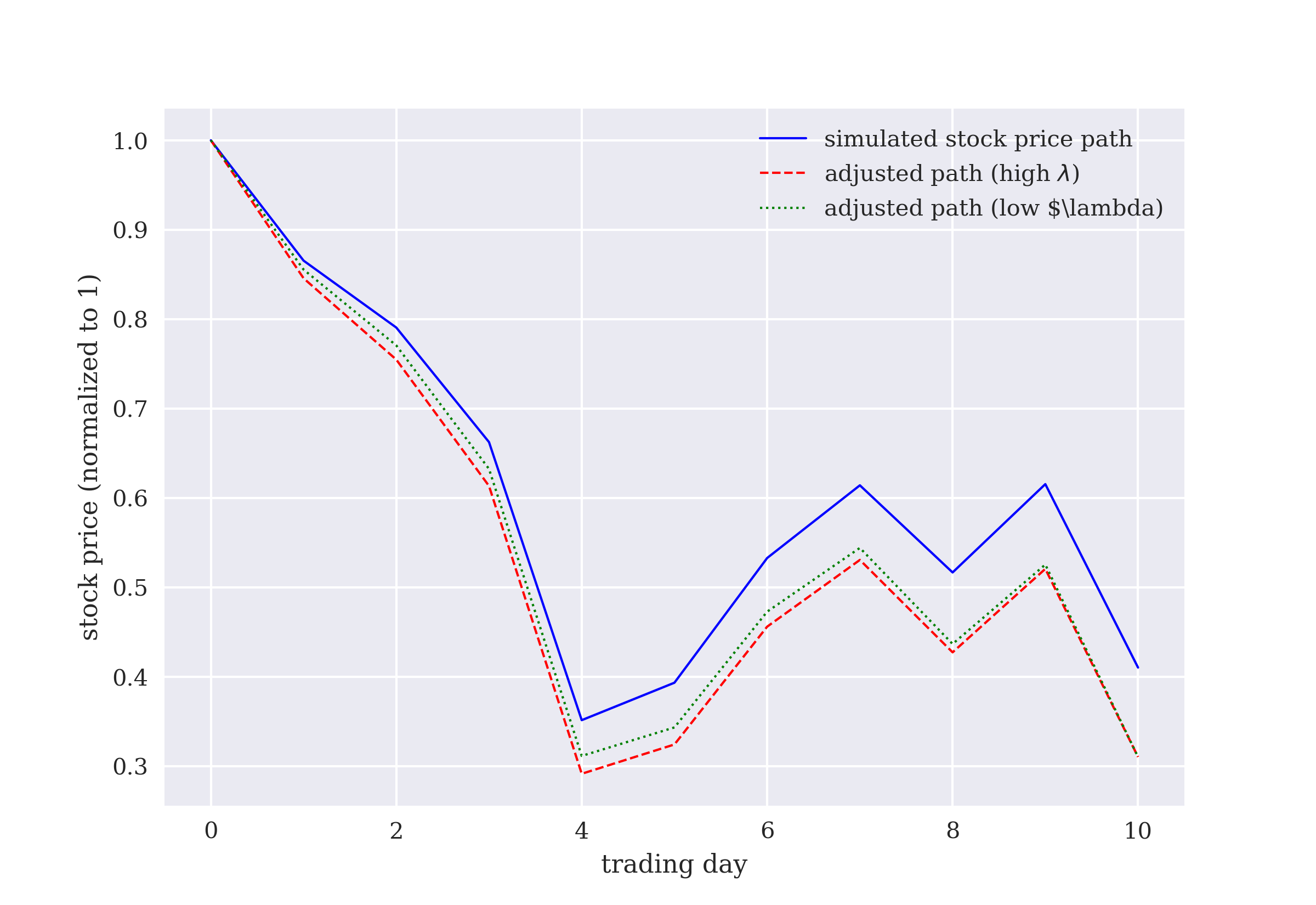

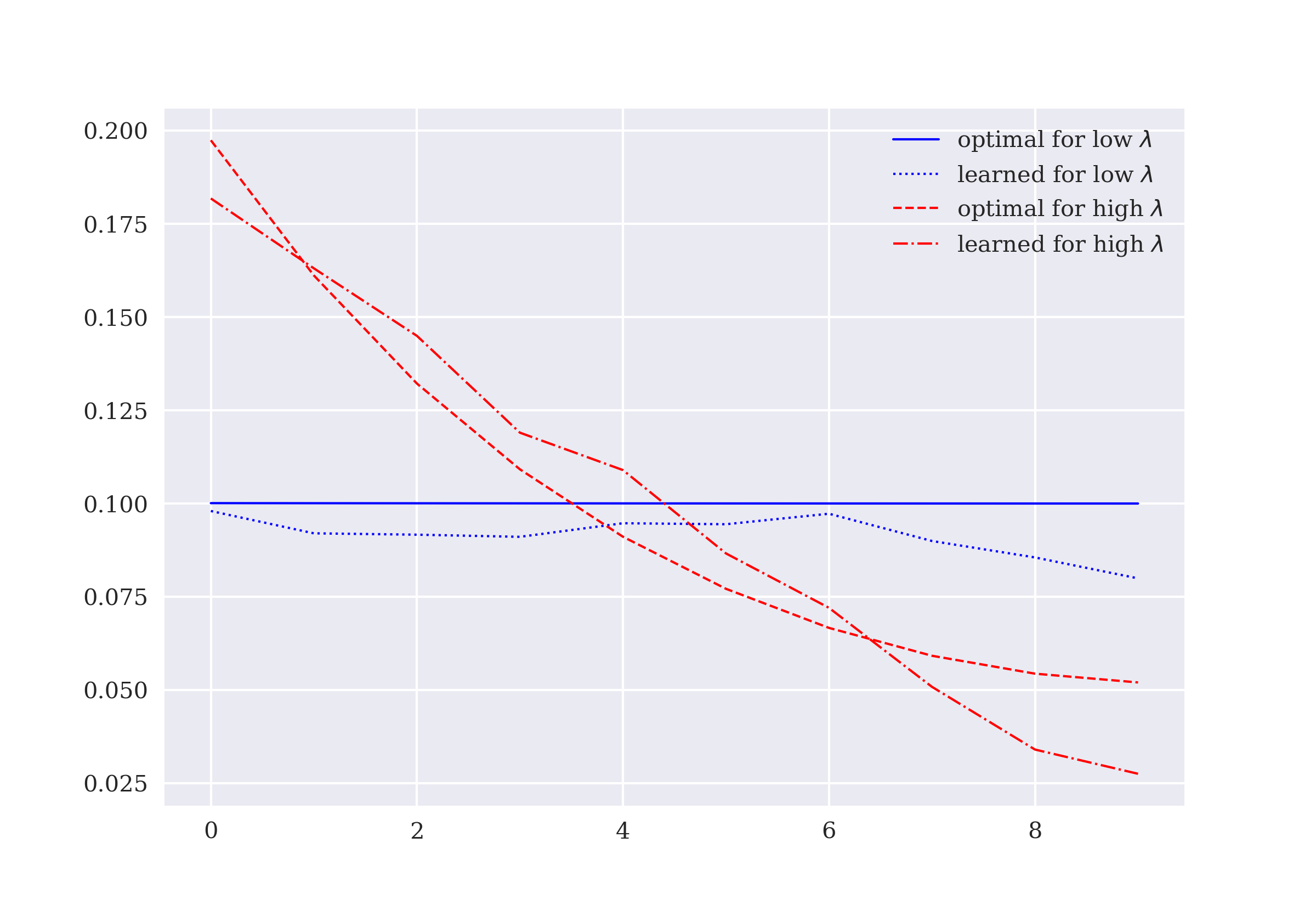

The tenth chapter is about the optimal liquidation of a large position in a stock. Given a certain risk aversion, the total execution costs are to be minimized. This use case differs from the others in that all actions are tightly connected with each other through an additional constraint. The chapter also introduces an additional RL algorithm in the form of an actor-critic implementation.

- Concluding Remarks

-

The final chapter of the book provides some concluding remarks and sketches out how the examples presented in the book can be improved upon.

The Basics

The first part of the book covers the basics of reinforcement learning and provides background information. It consists of three chapters:

-

Learning through Interaction focuses on learning through interaction with four major examples: probability matching, Bayesian updating, reinforcement learning (RL), and deep-Q-learning (DQL).

-

Deep Q-Learning introduces concepts from dynamic programming (DP) and discusses DQL as an approach to approximate solutions to DP problems. The major theme is the derivation of optimal policies to maximize a given objective function through taking a sequence of actions and updating the optimal policy iteratively. DQL is illustrated based on the

CartPolegame from thegymnasiumPython package. -

Financial Q-Learning develops a first finance environment that allows the DQL agent from Deep Q-Learning to learn a financial prediction game. Although the environment formally replicates the application programming interface (API) of the

CartPole, it misses yet some important characteristics to apply RL successfully.

1. Learning through Interaction

The idea that we learn by interacting with our environment is probably the first to occur to us when we think about the nature of learning.

For human beings and animals alike, learning is almost as fundamental as breathing. It is something that happens continuously and most often unconsciously. There are different forms of learning. The one most important to the topics covered in this book is based on interacting with an environment.

Interaction with an environment provides the learner — or agent henceforth — with feedback that can be used to update their knowledge or to refine a skill. In this book, we are mostly interested in learning quantifiable facts about an environment, such as the odds of winning a bet or the reward that an action yields.

Bayesian Learning discusses Bayesian learning as an example of learning through interaction. Reinforcement Learning presents breakthroughs in artificial intelligence that were made possible through reinforcement learning. It also describes the major building blocks of reinforcement learning. Deep Q-Learning explains the two major characteristics of deep Q-learning which is the most important algorithm for the remainder of the book.

1.1. Bayesian Learning

Two simple examples can illustrate learning by interacting with an environment: tossing a biased coin and rolling a biased die. The examples are based on the idea that an agent betting repeatedly on the outcome of a biased gamble — and remembering all outcomes — can learn bet-by-bet about a gamble’s bias and therewith about the optimal policy for betting. The idea, in that sense, makes use of Bayesian updating. Bayes theorem and Bayesian updating date back to the 18th century (see Bayes and Price (1763)). A modern and Python-based discussion of Bayesian statistics is found in Downey (2021).

1.1.1. Tossing a Biased Coin

Assume the simple game of betting on the outcome of tossing a biased coin. As a benchmark, consider the special case of an unbiased coin first. Agents are allowed to bet for free on the outcome of the coin tosses. An agent might, for example, bet randomly on either heads or tails. If the agent wins, the reward is 1 USD and nothing otherwise. The agent’s goal is to maximize the total reward. The following Python code simulates several sequences of 100 bets each:

In [1]: import numpy as np

from numpy.random import default_rng

rng = default_rng(seed=100)

In [2]: ssp = [1, 0] (1)

In [3]: asp = [1, 0] (2)

In [4]: def epoch():

tr = 0

for _ in range(100):

a = rng.choice(asp) (3)

s = rng.choice(ssp) (4)

if a == s:

tr += 1 (5)

return tr

In [5]: rl = np.array([epoch() for _ in range(250)]) (6)

rl[:10]

Out[5]: array([56, 47, 48, 55, 55, 51, 54, 43, 55, 40])

In [6]: rl.mean() (7)

Out[6]: 49.968| 1 | The state space, 1 for heads and 0 for tails. |

| 2 | The action space, 1 for a bet on heads and 0 for one on tails. |

| 3 | The random bet. |

| 4 | The random coin toss. |

| 5 | The reward for a winning bet. |

| 6 | The simulation of multiple sequences of bets. |

| 7 | The average total reward. |

The average total reward in this benchmark case is close to 50. The same result might be achieved by solely betting on either heads or tails.

Assume now that the coin is biased in a way that heads prevails in 80% of the coin tosses. Betting solely on heads would yield an average total reward of about 80 for 100 bets. Betting solely on tails would yield an average total reward of about 20. But what about the random betting strategy? The following Python code simulates this case:

In [7]: ssp = [1, 1, 1, 1, 0] (1)

In [8]: asp = [1, 0] (2)

In [9]: def epoch():

tr = 0

for _ in range(100):

a = rng.choice(asp)

s = rng.choice(ssp)

if a == s:

tr += 1

return tr

In [10]: rl = np.array([epoch() for _ in range(250)])

rl[:10]

Out[10]: array([53, 56, 40, 55, 53, 49, 43, 45, 50, 51])

In [11]: rl.mean()

Out[11]: 49.924| 1 | The biased state space. |

| 2 | The same action space as before. |

Although the coin is now highly biased the average total reward of the random betting strategy is about the same as in the benchmark case. This might sound counterintuitive. However, the expected win rate is given by \(0.8 \cdot 0.5 + 0.2 \cdot 0.5 = 0.5\). In words, when betting on heads the win rate is 80% and when betting on tails it is 20%. Together, the total reward is as before on average. As a consequence, without learning, the agent is not able to capitalize on the bias.

A learning agent on the other hand can gain an edge by basing the betting strategy on the previous outcomes they observe. To this end, it is already enough to record all observed outcomes and to choose randomly from the set of all previous outcomes. In this case, the bias is reflected in the number of times the agent randomly bets on heads as compared to tails. The Python code that follows illustrates this simple learning strategy:

In [12]: ssp = [1, 1, 1, 1, 0]

In [13]: def epoch(n):

tr = 0

asp = [0, 1] (1)

for _ in range(n):

a = rng.choice(asp)

s = rng.choice(ssp)

if a == s:

tr += 1

asp.append(s) (2)

return tr

In [14]: rl = np.array([epoch(100) for _ in range(250)])

rl[:10]

Out[14]: array([71, 65, 67, 69, 68, 72, 68, 68, 77, 73])

In [15]: rl.mean()

Out[15]: 66.78| 1 | The initial action space. |

| 2 | The update of the action space with the observed outcome. |

With remembering and learning, the agent achieves an average total reward of about 66.8 — a significant improvement over the random strategy without learning. This is close to the expected value of \((0.8^2 + 0.2^2) \cdot 100 = 68\).

This strategy, while not optimal, is regularly observed in experiments involving human beings — and maybe somewhat surprisingly in animals as well. It is called probability matching.

|

Probability Matching

Koehler and James (2014) report results from studies analyzing probability matching, utility maximization, and other types of decision strategies.[1] The studies include a total of 1,557 university students.[2] The researchers find that probability matching is the most frequent strategy chosen or a close second to the utility maximizing strategy. The researchers also find that the utility maximizing strategy is chosen in general by the “most cognitively able participants”. They approximate cognitive ability through Scholastic Aptitude Test (SAT) scores, Mathematics Experience Composite scores, and the Number of University Statistics courses taken. As is often the case in decision-making, human beings might need formal training and experience to overcome urges and behaviors that feel natural to achieve optimal results. |

On the other hand, the agent can do better by simply betting on the most likely outcome as derived from past results. The following Python code implements this strategy.

In [16]: from collections import Counter

In [17]: ssp = [1, 1, 1, 1, 0]

In [18]: def epoch(n):

tr = 0

asp = [0, 1] (1)

for _ in range(n):

c = Counter(asp) (2)

a = c.most_common()[0][0] (3)

s = rng.choice(ssp)

if a == s:

tr += 1

asp.append(s) (4)

return tr

In [19]: rl = np.array([epoch(100) for _ in range(250)])

rl[:10]

Out[19]: array([81, 70, 74, 77, 82, 74, 81, 80, 77, 78])

In [20]: rl.mean()

Out[20]: 78.828| 1 | The initial action space. |

| 2 | The frequencies of the action space elements. |

| 3 | The action is chosen with the highest frequency. |

| 4 | The update of the action space with the observed outcome. |

In this case, the gambler achieves an average total reward of 78.5 which is close to the theoretical optimum of 80. In this context, this strategy seems to be the optimal one.

1.1.2. Rolling a Biased Die

As another example, consider a biased die. For this die, the probability for the outcome “4” shall be five times as likely as for any other number of the six-sided die. The following Python code simulates sequences of 600 bets on the outcome of the die, where a correct bet is rewarded with 1 USD and nothing otherwise.

In [21]: ssp = [1, 2, 3, 4, 4, 4, 4, 4, 5, 6] (1)

In [22]: asp = [1, 2, 3, 4, 5, 6] (2)

In [23]: def epoch():

tr = 0

for _ in range(600):

a = rng.choice(asp)

s = rng.choice(ssp)

if a == s:

tr += 1

return tr

In [24]: rl = np.array([epoch() for _ in range(250)])

rl[:10]

Out[24]: array([ 92, 96, 106, 99, 96, 107, 101, 106, 92, 117])

In [25]: rl.mean()

Out[25]: 101.22| 1 | The biased state space. |

| 2 | The uninformed action space. |

Without learning, the random betting strategy yields an average total reward of about 100. With perfect information about the biased die, the agent could expect an average total reward of about 300 because it would win about 50% of the 600 bets.

With probability matching, the agent will not achieve a perfect outcome — as was the case with the biased coin. However, the agent can improve the average total reward by more than 75% as the following Python code shows:

In [26]: def epoch():

tr = 0

asp = [1, 2, 3, 4, 5, 6] (1)

for _ in range(600):

a = rng.choice(asp)

s = rng.choice(ssp)

if a == s:

tr += 1

asp.append(s) (2)

return tr

In [27]: rl = np.array([epoch() for _ in range(250)])

rl[:10]

Out[27]: array([182, 174, 162, 157, 184, 167, 190, 208, 171, 153])

In [28]: rl.mean()

Out[28]: 176.296| 1 | The initial action space. |

| 2 | The update of the action space. |

The average total reward increases to about 177, which is not that far from the expected value of that strategy of \((0.5^2 + 0.1^2 \cdot 5) \cdot 600 = 180\).

As with the biased coin tossing game, the agent again can do better by simply choosing the action with the highest frequency in the updated action space, as the following Python code confirms. The average total reward of 299 is pretty close to the theoretical maximum of 300:

In [29]: def epoch():

tr = 0

asp = [1, 2, 3, 4, 5, 6] (1)

for _ in range(600):

c = Counter(asp) (2)

a = c.most_common()[0][0] (3)

s = rng.choice(ssp)

if a == s:

tr += 1

asp.append(s) (4)

return tr

In [30]: rl = np.array([epoch() for _ in range(250)])

rl[:10]

Out[30]: array([305, 288, 312, 306, 318, 302, 304, 311, 313, 281])

In [31]: rl.mean()

Out[31]: 297.204| 1 | The initial action space. |

| 2 | The frequencies of the action space elements. |

| 3 | The action is chosen with the highest frequency. |

| 4 | The update of the action space with the observed outcome. |

1.1.3. Bayesian Updating

The Python code and simulation approach in the previous sub-sections make for a simple way to implement the learning of an agent through playing a potentially biased game. In other words, by interacting with the betting environment, the agent can update their estimates for the relevant probabilities.

The procedure can therefore be interpreted as Bayesian updating of probabilities — to find out, for example, the bias of a coin.[3] The following discussion illustrates this insight based on the coin-tossing game.

Assume that the probability for heads (h) is \(P(h) = \alpha\) and that the probability for tails (t) accordingly is \(P(t) = 1 - \alpha\). The coin flips are assumed to be identically and independently distributed (i.i.d) according to the binomial distribution. Assume that an experiment yields \(f_h\) times heads and \(f_t\) times tails. Furthermore, assume that the binomial coefficient is given by:

In that case, we get \(P(E | \alpha) = B \cdot \alpha^{f_h} \cdot (1 - \alpha)^{f_t}\) as the probability that the experiment yields the assumed observations. \(E\) represents the event that \(f_h\) times heads and \(f_t\) times tails is observed.

One approach to deriving an appropriate value for \(\alpha\) given the results from the experiment is maximum likelihood estimation (MLE). The goal of MLE is to find a value \(\alpha\) that maximizes \(P(E | \alpha)\). The problem to solve as follows:

With this, one derives the optimal estimator by taking the first derivative with respect to \(\alpha\) and setting it equal to zero:

Simple manipulations yield the following maximum likelihood estimator:

\(\alpha^{MLE}\) is the frequency of heads over the total number of flips in the experiment. This is what has been learned flip-by-flip through the simulation approach, that is, through an agent betting on the outcomes of coin flips one after the other and remembering previous outcomes.

In other words, the agent has implemented Bayesian updating incrementally and bet-by-bet to arrive, after enough bets, to a numerical estimator \(\hat{\alpha}\) close to \(\alpha^{MLE}\), that is, \(\hat{\alpha} \approx \alpha^{MLE}\).

1.2. Reinforcement Learning

Reinforcement learning (RL) is a type of machine learning (ML) algorithm that relies on the interaction of an agent with an environment. This aspect is similar to the agent playing a potentially biased game and learning about relevant probabilities. However, RL algorithms are more general and capable in that an agent can learn from high-dimensional input to accomplish complex tasks.

While the mode of learning, interaction or trial and error, differs from other ML methods, the goals are nevertheless the same. Mitchell (1997) defines ML as follows:

A computer program is said to learn from experience \(E\) with respect to some class of tasks \(T\) and performance measure \(P\), if its performance at tasks in \(T\), as measured by \(P\), improves with experience \(E\).

This section provides some general background to RL while the next chapter introduces more technical details. Sutton and Barto (2018) provide a comprehensive overview of RL approaches and algorithms. On a high level, they describe RL as follows:

Reinforcement learning is about learning from interaction how to behave in order to achieve a goal. The reinforcement learning agent and its environment interact over a sequence of discrete time steps.

|

Reinforcement Learning

Most books on ML focus on supervised and unsupervised learning algorithms, but RL is the learning approach that comes closest to how human beings and animals learn. Namely, through repeated interaction with their environment and receiving positive (reinforcing) or negative (punishing) feedback. Such a sequential approach is much closer to human learning than the simultaneous learning from a generally very large number of labeled or unlabeled examples. |

1.2.1. Major Breakthroughs

In artificial intelligence (AI) research and practice, two types of algorithms have seen a meteoric rise over the last ten years: deep neural networks (DNNs) and reinforcement learning (RL).[4] While DNNs have seen their own success stories in many different application areas, they also play an integral role in modern RL algorithms, such as Q-learning.[5]

The book by Gerrish (2018) recounts several major success stories — and sometimes also failures — of AI over recent decades. In almost all of them, DNNs play a central role and RL algorithms sometimes are also a core part of the story. Among those successes are playing Atari 2600 games, chess, and Go on superhuman levels. These are discussed in what follows.

Concerning RL, and Q-learning in particular, the company DeepMind has achieved several noteworthy breakthroughs. In Mnih et al. (2013) and Mnih et al. (2015), the company reports how a so-called deep Q-learning (DQL) agent can learn to play Atari 2600 console[6] games on a superhuman level through interacting with a game-playing API. Bellemare et al. (2013) provide an overview of this popular API for the training of RL agents.

While mastering Atari games is impressive for an RL agent, and was celebrated by the AI researcher and retro gamer communities alike, the breakthrough concerning popular board games, such as Go and chess, gained the highest public attention and admiration.

In 2014, researcher and philosopher Nick Bostrom predicted in his popular book Superintelligence that it might take another 10 years for AI researchers to come up with an AI agent that plays the game of Go on a superhuman level:

Go-playing programs have been improving at a rate of about 1 dan/year in recent years. If this rate of improvement continues, they might beat the human world champion in about a decade.

However, DeepMind researchers were able to successfully leverage the DQL techniques developed for playing Atari games and to come up with a DQL agent, called AlphaGo, that first beat the European champion in Go in 2015 and later in early 2016 even the world champion.[7] The details are documented in Silver et al. (2017). They summarize:

A long-standing goal of artificial intelligence is an algorithm that learns, tabula rasa, superhuman proficiency in challenging domains. Recently, AlphaGo became the first program to defeat a world champion in the game of Go. The tree search in AlphaGo evaluated positions and selected moves using deep neural networks. These neural networks were trained by supervised learning from human expert moves, and by reinforcement learning from self-play.

DeepMind was able to generalize the approach of AlphaGo, which primarily relies on DQL agents playing a large number of games against themselves (“self-playing”), to the board games of chess and shogi. DeepMind calls this generalized agent AlphaZero. What is most impressive about AlphaZero is that only a few hours of self-playing chess for training are enough to reach not only a superhuman level but also a level well above any other computer engine, such as Stockfish. The paper by Silver et al. (2018) provides the details and summarizes:

In this paper, we generalize this approach into a single AlphaZero algorithm that can achieve superhuman performance in many challenging games. Starting from random play and given no domain knowledge except the game rules, AlphaZero convincingly defeated a world champion program in the games of chess and shogi (Japanese chess), as well as Go.

The paper also provides the following training times:

Training lasted for approximately 9 hours in chess, 12 hours in shogi, and 13 days in Go …

The dominance of AlphaZero over Stockfish in chess is not only remarkable given the short training time. It is also remarkable because AlphaZero only evaluates a much lower number of positions per second:

AlphaZero searches just 60,000 positions per second in chess and shogi, compared with 60 million for Stockfish …

One is inclined to attribute this to some form of acquired tactical and strategic intelligence on the side of AlphaZero as compared to predominantly brute force computation on the side of Stockfish.

|

Reinforcement and Deep Learning

The breakthroughs in AI outlined in this sub-section rely on a combination of RL and DL. While DL can be applied in many scenarios, such as standard supervised and unsupervised learning situations, without RL, RL is in general today exclusively applied with the help of DL and DNNs. |

1.2.2. Major Building Blocks

It is not that simple to exactly pin down why DQL algorithms are so successful in many domains that were obviously so hard to crack by computer scientists and AI researchers for decades. However, it is relatively straightforward to describe the major building blocks of an RL and DQL algorithm.

It generally starts with an environment. This can be an API to play Atari games, an environment to play chess, or an environment for navigating a map indoors or outdoors. Nowadays, there are many such environments available to get started with RL efficiently. One of the most popular ones is the Gymnasium environment.[8] On the Github page you read:

Gymnasium is an open source Python library for developing and comparing reinforcement learning algorithms by providing a standard API to communicate between learning algorithms and environments, as well as a standard set of environments compliant with that API.

At any given point, an environment is characterized by a state. The state summarizes all the relevant, and sometimes also irrelevant, information for an agent to receive as input when interacting with an environment. Concerning chess, a board position with all relevant pieces represents such a state. Sometimes additional input is required like, for example, whether castling has happened or not. For an Atari game, the pixels of the screen and the current score could represent the state of the environment.

The agent in this context subsumes all elements of the RL algorithm that interact with the environment and that learn from these interactions. In an Atari games context, the agent might represent a player playing the game. In the context of chess, it can be either the player playing the white or black pieces.

An agent can choose one action from an often finite set of allowed actions. In an Atari game, movements to the left or right might be allowed actions. In chess, the rule set specifies both the number of allowed actions and their types.

Given the action of an agent, the state of the environment is updated. One such update is generally called a step. The concept of a step is general enough to encompass both heterogeneous and homogeneous time intervals between two steps. Whereas in Atari games, for example, real-time interaction with the game environment is simulated by rather short, homogeneous time intervals (“game clock”), chess players have quite some flexibility with regard to how long it takes them to make the next move (take the next action).

Depending on the action an agent chooses, a reward or penalty is awarded. For an Atari game, points are a typical reward. In chess, it is often a bit more subtle in that an evaluation of the current board position must take place. Improvements in the evaluation then represent a reward while a worsening of the evaluation represents a penalty.

In RL, an agent is assumed to maximize an objective function. For Atari games, this can simply be the score achieved, that is, the sum of points collected during gameplay. In other words, it is a hunt for new “high scores”. In playing chess, it is to set the opponent checkmate as represented by, say, an infinite evaluation score of the board position.

The policy defines which action an agent takes given a certain state of the environment. This is done by assigning values — technically, floating point numbers — to all possible combinations of states and actions. An optimal action is then chosen by looking up the highest value possible for the current state and the set of possible actions. Given a certain state in an Atari game, represented by all the pixels that make up the current scene, the policy might specify that the agent chooses “move right” as the optimal action. In chess, given a specific board position, the policy might specify to move the white king from c1 to b1.

An episode is a collection of steps from the initial state of the environment until success is achieved or failure is observed. In an Atari game, this means from the start of the game until the agent has lost all “lives” — or has maybe achieved a final goal of the game. In chess, an episode represents a full game until a win, loss, or draw.

In summary, RL algorithms are characterized by the following building blocks:

-

Environment

-

State

-

Agent

-

Action

-

Step

-

Reward

-

Objective

-

Policy

-

Episode

|

Modeling Environments

The famous quote “Things should be as simple as possible, but no simpler”, usually attributed to Albert Einstein, can serve as a guideline for the design of environments and their APIs for reinforcement learning. Like in the context of a scientific model, an environment should capture all relevant aspects of the phenomena to be covered by it and dismiss those that are irrelevant. Sometimes, tremendous simplifications can be made based on this approach. At other times, an environment must represent the complete problem at hand. For example, when playing chess the complete board position with all the pieces is relevant. |

1.3. Deep Q-Learning

What characterizes so-called deep Q-learning (DQL) algorithms? To begin with, QL is a special form of RL. In that sense, all the major building blocks of RL algorithms apply to QL algorithms as well. There are two specific characteristics of DQL algorithms.

First, DQL algorithms evaluate both the immediate reward of an agent’s action and the delayed reward of the action. The delayed reward is estimated through an evaluation of the state that unfolds when the action is taken. The evaluation of the unfolding state is done under the assumption that all actions going forward are chosen optimally.

In chess, it is obvious that it is by far not sufficient to evaluate the very next move. It is rather necessary to look a few moves ahead and to evaluate different alternatives that can ensue. A chess novice has a hard time, in general, looking just two or three moves ahead. A chess grandmaster on the other hand can look as far as 20 to 30 moves ahead, as some argue.[9]

Second, DQL algorithms use DNNs to approximate, learn, and update the optimal policy. For most interesting environments in RL, the mapping of states and possible actions to values is too complex to be modeled explicitly, say, through a table or a mathematical function. DNNs are known to have excellent approximation capabilities and provide all the flexibility needed to accommodate almost any type of state that an environment might communicate to the DQL agent.

Considering again chess as an example, it is estimated that there are more than \(10^{100}\) possible moves, with illegal moves included. This compares to \(10^{80}\) as an estimate for the number of atoms in the universe. With legal moves only, there are about \(10^{40}\) possible moves, which is still a pretty large number:

In [32]: cm = 10 ** 40

print(f'{cm:,}')

10,000,000,000,000,000,000,000,000,000,000,000,000,000This shows that only an approximation of the optimal policy is feasible in almost all interesting RL cases.

1.4. Conclusions

This chapter focuses on learning through interaction with an environment. It is a natural phenomenon, observed in human beings and animals alike. Simple examples show how an agent can learn probabilities through repeatedly betting on the outcome of a gamble and thereby implementing Bayesian updating. For this book, RL algorithms are the most important ones. Breakthroughs related to RL and the building blocks of RL are discussed. DQL, as a special RL algorithm, is characterized by taking into account not only immediate rewards but also delayed rewards from taking an action. In addition, the optimal policy is generally approximated by DNNs. Later chapters cover the DQL algorithm in much more detail and use it extensively.

1.5. References

Articles and books cited in this chapter:

-

Bayes, Thomas and Richard Price (1763): “An Essay towards Solving a Problem in the Doctrine of Chances. By the Late Rev. Mr. Bayes, F.R.S. Communicated by Mr. Price, in a Letter to John Canton, A.M. F.R.S” Philosophical Transactions of the Royal Society of London, Vol. 53, 370–418.

-

Bellemare, Marc et al. (2013): “The Arcade Learning Environment: An Evaluation Platform for General Agents.” Journal of Artificial Intelligence Research, Vol. 47, 253–279.

-

Bostrom, Nick (2014): Superintelligence—Paths, Dangers, Strategies. Oxford University Press, Oxford.

-

Downey, Allen (2021): Think Bayes. 2nd. ed., O’Reilly Media, Sebastopol.

-

Gerrish, Sean (2018): How Smart Machines Think. MIT Press, Cambridge.

-

Goodfellow, Ian, Yoshua Bengio, and Aaron Courville (2016): Deep Learning. MIT Press, Cambridge, http://deeplearningbook.org.

-

Hanel, Paul, Katia Vione (2016): “Do Student Samples Provide an Accurate Estimate of the General Public?” PLoS ONE, Vol. 11, No. 12.

-

Mitchell, Tom (1997): Machine Learning. McGraw-Hill, New York.

-

Mnih, Volodymyr et al. (2013): “Playing Atari with Deep Reinforcement Learning” https://doi.org/10.48550/arXiv.1312.5602.

-

Mnih, Volodymyr et al. (2015): “Human-Level Control through Deep Reinforcement Learning” Nature, Vol. 518, 529–533.

-

Rachev, Svetlozar et al. (2008): Bayesian Methods in Finance. John Wiley & Sons, Hoboken.

-

Silver, David et al. (2017): “Mastering the Game of Go without Human Knowledge.” Nature, Vol. 550, 354–359.

-

Silver, David et al. (2018): “A General Reinforcement Learning Algorithm that Masters Chess, Shogi and Go through Self-Play”. Science, Vol. 362, Issue 6419, 1140-1144.

-

Sutton, Richard and Andrew Barto (2018): Reinforcement Learning: An Introduction. 2nd ed., The MIT Press, Cambridge and London.

-

Watkins, Christopher (1989): Learning from Delayed Rewards. Ph.D. thesis, University of Cambridge.

-

Watkins, Christopher and Peter Dayan (1992): “Q-Learning.” Machine Learning, Vol. 8, 279-282.

-

West, Richard and Keith Stanovich (2003): “Is Probability Matching Smart? Associations Between Probabilistic Choices and Cognitive Ability.” Memory & Cognition, Vol. 31, No. 2, 243-251.

2. Deep Q-Learning

Like a human, our agents learn for themselves to achieve successful strategies that lead to the greatest long-term rewards. This paradigm of learning by trial-and-error, solely from rewards or punishments, is known as reinforcement learning (RL).[10]

The previous chapter introduces deep Q-learning (DQL) as a major algorithm in artificial intelligence (AI) to learn through interaction with an environment. This chapter provides some more details about the DQL algorithm. It uses the CartPole environment from the gymnasium Python package to illustrate the API-based interaction with gaming environments. It also implements a DQL agent as a self-contained Python class that serves as a blueprint for later DQL agents applied to financial environments.

However, before the focus is turned on DQL the chapter discusses general decision problems in economics and finance. Dynamic programming is introduced as a solution mechanism for dynamic decision problems. This provides the background for the application of DQL algorithms because they can be considered to lead to approximate solutions to dynamic programming problems.

Decision Problems classifies decision problems in economics and finance according to different characteristics. Dynamic Programming focuses on a special type of decision problem, so-called finite horizon Markovian dynamic programming problems. Q-Learning outlines the major elements of Q-learning and explains the role of deep neural networks in this context. Finally, CartPole as an Example illustrates a DQL setup by the use of the CartPole game API and a DQL agent implemented as a Python class.

2.1. Decision Problems

In economics and finance, optimization and associated techniques play a central role. One could almost say that finance is nothing else than the systematic application of optimization techniques to problems arising in a financial context. Different types of optimization problems can be distinguished in finance. The major differentiating criteria as follows:

- Discrete vs. continuous action space

-

The quantities or actions to be chosen through optimization can be from a set of finite, discrete options (optimal choice) or from a set of infinite, continuous options (optimal control).

- Static vs. dynamic problems

-

Some problems are one-off optimization problems — these are generally called static problems. Other problems are characterized by a typically large number of sequential and connected optimization problems over time — these are called dynamic problems.

- Finite vs. infinite horizon

-

Dynamic optimization problems can have a finite or infinite horizon. Playing a game of chess generally has a finite horizon.[11] Estate planning for multiple generations of a family can be seen as a decision problem with an infinite horizon. Climate policy might be another one.

- Discrete vs. continuous time

-

Some dynamic problems only require discrete decisions and optimizations at different points in time. Chess playing is again a good example. Other dynamic problems require continuous decisions and optimizations. Driving a car or flying an airplane are examples for when a driver or pilot needs to continuously make sure that appropriate decisions are made.

Given the examples discussed in Learning through Interaction, betting on the outcome of a biased coin is a static problem with discrete action space. Although such a bet can be repeated multiple times, the optimal betting strategy is independent of the previous bet as well as of the next bet. On the other hand, playing a game of chess is a dynamic problem — with a finite horizon — because a player needs to make a sequence of optimal decisions that are all dependent on each other. The current board position depends on the player’s (and the opponent’s) previous moves. The future move options (action space) depend on the current move the player chooses.

In summary, because the action space is finite in both cases, coin betting is a discrete, static optimization problem, whereas playing chess is a discrete, dynamic optimization problem with finite horizon.

2.2. Dynamic Programming

An important type of dynamic optimization problem is the finite horizon Markovian dynamic programming problem (FHMDP). An FHMDP can formally be described by the following tuple[12]:

\(S\) is the state space of the problem with generic element \(s\). \(A\) is the action space of the problem with generic element \(a\). \(T\) is a positive integer and represents the finite horizon of the problem.

For each point in time, at which an action is to be chosen, \(t \in \{0, 1, \dots , T\}\), there are two relevant functions and one relevant correspondence. The reward function maps a state and an action to a real-valued reward. If an agent at time \(t\) chooses action \(a_t\) in state \(s_t\) they receive a reward of \(r_t\):

The transition function maps a state and an action to another state. This function models the step from state \(s_t\) to state \(s_{t+1}\) when action \(a_t\) is taken:

Finally, the feasible action correspondence maps states to feasible actions. Given a state \(s_t\), the correspondence defines all feasible actions \(\{a_t^1, a_t^2, \dots \}\) for that state:

The objective of an agent is to choose a plan for taking actions at each point in time to maximize the sum of the per-period rewards over the horizon of the model. In other words, an agent needs to solve the following optimization problem:

subject to

What does Markovian mean in this context? It means that the transition function only depends on the current state and the current action taken and not on the full history of all states and actions. Formally, the following equality holds.

In this context, one also needs to distinguish between FHMDP problems for which the transition function is deterministic or stochastic. For chess, it is clear that the transition function is deterministic. On the other hand, typical computer games and all games offered in casinos generally have stochastic elements and as a consequence stochastic transition functions. If the transition function is stochastic, one usually speaks of stochastic dynamic programming.

A Markovian policy \(\sigma\) is a contingency plan that specifies which action \(a\) is to be taken if state \(s\) is observed. For an FHMDP, this implies \(\sigma: S \rightarrow A\) with \(\sigma_t(s_t) \in \Phi_t(s_t)\). This gives the set of all feasible policies, \(\sigma \in \Sigma\).

The total reward of a feasible policy \(\sigma\) is denoted by

The value function \(V: S \rightarrow \mathbb{R}\) is then defined by the supremum of the total reward over all feasible policies:

For an optimal policy \(\sigma^*\), the following must hold:

The problem of an agent faced with an FHMDP can therefore also be interpreted as finding an optimal policy with the above characteristics. If an optimal strategy \(\sigma^*\) exists, it can be shown that the value function, in general, satisfies the so-called Bellmann equation:

In other words, a dynamic decision problem involving simultaneous optimization over a combination of a potentially infinitely large number of feasible actions can be decomposed into a sequence of static, single-step optimization problems. Duffie (1988, 182), for example, summarizes:

In multi-period optimization problems, the problem of selecting actions over all periods can be decomposed into a family of single-period problems. In each period, one merely chooses an action maximizing the sum of the reward for that period and the value of beginning the problem again in the following period.

In classical and modern economic and financial theory, a large number of FHMDP problems can be found, such as:

-

Optimal growth over time,

-

Optimal consumption-saving over time,

-

Optimal portfolio allocation over time,

-

Dynamic hedging of options and derivatives, or

-

Optimal execution strategies in algorithmic trading.

Generally, these problems need to be modeled as FHMDP problems with stochastic transition functions. This is because most financial quantities, such as commodity prices, interest rates, stock prices, are uncertain and stochastic.

In particular, when dynamic programming involves continuous time modeling and stochastic transition functions — as is often the case in economics and finance — the mathematical requirements are pretty high. They involve, among other things, analysis on metric spaces, measure-theoretic probability, and stochastic calculus. For an introduction to stochastic dynamic programming in Markovian financial models, refer to the book by Duffie (1988) for the discrete time case and Duffie (2001) for the continuous time case. For a comprehensive review of the required mathematical techniques in deterministic and stochastic dynamic programming and many economic examples, see the book by Stucharski (2009). The book by Sargent and Stucharski (2023) also covers dynamic programming and is accompanied by both Julia and Python code examples.

2.3. Q-Learning

Even with the most sophisticated mathematical techniques, many interesting FHMDPs in economics, finance, and other fields defy analytical solutions. In such cases, numerical methods that can approximate optimal solutions are usually the only feasible choice. Among these numerical methods is Q-learning (QL) as a major reinforcement learning (RL) technique (see also Deep Q-Learning).

Watkins (1989) and Watkins and Dayan (1992) are pioneering works about modern QL. At the beginning of his Ph.D. thesis, Watkins (1989) writes:

This thesis will present a general computational approach to learning from rewards and punishments, which may be applied to a wide range of situations in which animal learning has been studied, as well as to many other types of learning problems.

In Watkins and Dayan (1992), the authors describe the algorithm as follows:

Q-learning (Watkins, 1989) is a form of model-free reinforcement learning. It can also be viewed as a method of asynchronous dynamic programming (DP). It provides agents with the capability of learning to act optimally in Markovian domains by experiencing the consequences of actions, without requiring them to build maps of the domains. …

[A]n agent tries an action at a particular state, and evaluates its consequences in terms of the immediate reward or penalty it receives and its estimate of the value of the state to which it is taken. By trying all actions in all states repeatedly, it learns which are best overall, judged by long-term discounted reward. Q-learning is a primitive (Watkins, 1989) form of learning, but, as such, it can operate as the basis of far more sophisticated devices.

Consider an FHMDP as in the previous section.

In this context, the \(Q\) in QL stands for an action policy that assigns to each state \(s_t \in S\) and feasible action \(a_t \in A\) a numerical value. The numerical value is composed of the immediate reward of taking action \(a_t\) and the discounted delayed reward —- given an optimal action \(a_{t+1}^*\) taken in the subsequent state. Formally, this can be written as (note the resemblance with the reward function):

Then, with \(\gamma \in (0, 1]\) being a discount factor, \(Q\) takes on the following functional form:

In general, the optimal action policy \(Q\) cannot be specified in analytical form, that is, in the form of a table or mathematical function. Therefore, QL relies in general on approximate representations for the optimal policy \(Q\).

If a deep neural network (DNN) is used for the representation, one usually speaks of deep Q-learning (DQL). To some extent, the use of DNNs in DQL might seem somewhat arbitrary. However, there are strong mathematical results — for example, the universal approximation theorem — that show the powerful approximation capabilities of DNNs. Wikipedia summarizes in this context:

In the mathematical theory of artificial neural networks, the universal approximation theorem states that a feed-forward network with a single hidden layer containing a finite number of neurons can approximate continuous functions … The theorem thus states that simple neural networks can represent a wide variety of interesting functions when given appropriate parameters; however, it does not touch upon the algorithmic learnability of those parameters.

As in RL in general, QL is based on an agent interacting with an environment and learning from the ensuing experiences through rewards and penalties. A QL agent takes actions based on two different principles:

- Exploitation

-

This refers to actions taken by the QL agent under the current optimal policy \(Q\).

- Exploration

-

This refers to actions taken by a QL agent that are random. The purpose is to explore random actions and their associated values beyond what the current optimal policy would dictate.

Usually, the QL agent is supposed to follow an \(\epsilon-\)greedy strategy. In this regard, the parameter \(\epsilon\) defines the ratio with which the agent relies on exploration as compared to exploitation. During the training of the QL agent, \(\epsilon\) is generally assumed to decrease with an increasing number of training units.

In DQL, the policy \(Q\) — that is, the DNN — is regularly updated through what is called replay. For replay, the agent must store passed experiences (states, actions, rewards, next states, etc.) and use, in general relatively small, batches from the memorized experiences to re-train the DNN. In the limit — that is the idea and “hope” — the DNN approximates the optimal policy for the problem well enough. In most cases, an optimal policy is not achievable at all since the problem at hand is simply too complex — such as chess is with its \(10^{40}\) possible moves.

|

DNNs for Approximation

The usage of DNNs in Q-learning agents is not arbitrary. The representation (approximation) of the optimal action policy \(Q\) generally is a demanding task. DNNs have powerful approximation capabilities which explains their regular usage as the “brain” for a Q-learning agent. |

2.4. CartPole as an Example

The gymnasium package for Python provides several environments (APIs) that are suited for RL agents to be trained. CartPole is a relatively simple game that requires an agent to balance a pole on a cart by pushing the cart to the left or right. This section illustrates the API for the game, that is the environment, and shows how to implement a DQL agent in Python that can learn to play the game perfectly.

2.4.1. The Game Environment

The gymnasium package is installed as follows:

pip install gymnasium

Details about the CartPole game are found at https://gymnasium.farama.org. The first step is the creation of an environment object:

In [1]: import gymnasium as gym

In [2]: env = gym.make('CartPole-v1')This object allows the interaction via simple method calls. For example, it allows us to see how many actions are feasible (action space), to sample random actions, or to get more information about the state description (observation space):

In [3]: env.action_space

Out[3]: Discrete(2)

In [4]: env.action_space.n (1)

Out[4]: 2

In [5]: [env.action_space.sample() for _ in range(10)] (1)

Out[5]: [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]

In [6]: env.observation_space

Out[6]: Box([-4.8000002e+00 -3.4028235e+38 -4.1887903e-01 -3.4028235e+38],

[4.8000002e+00 3.4028235e+38 4.1887903e-01 3.4028235e+38], (4,),

float32)

In [7]: env.observation_space.shape (2)

Out[7]: (4,)| 1 | Two actions, 0 and 1, are possible. |

| 2 | The state is described by four parameters. |

The environment allows an agent to take one of two actions:

-

0: Push the cart to the left. -

1: Push the cart to the right.

The environment models the state of the game through four physical parameters:

-

Cart position

-

Cart velocity

-

Pole angle

-

Pole angular velocity

CartPole game shows a visual representation of a state of the CartPole game.

To play the game, the environment is first reset, leading by default to a randomized initial state. Every action steps the environment forward to the next state:

In [8]: env.reset(seed=100) (1)

# cart position, cart velocity, pole angle, pole angular velocity

Out[8]: (array([ 0.03349816, 0.0096554 , -0.02111368, -0.04570484],

dtype=float32),

{})

In [9]: env.step(0) (2)

Out[9]: (array([ 0.03369127, -0.18515752, -0.02202777, 0.24024247],

dtype=float32),

1.0,

False,

False,

{})

In [10]: env.step(1) (2)

Out[10]: (array([ 0.02998812, 0.01027205, -0.01722292, -0.05930644],

dtype=float32),

1.0,

False,

False,

{})| 1 | Resets the environment, using a seed value for the random number generator. |

| 2 | Steps the environment one step forward by taking one of two actions. |

The returned tuple contains the following data:

-

New state

-

Reward

-

Terminated

-

Truncated

-

Additional data

The game can be played until True is returned for “terminated”. For every step, the agent receives a reward of 1. The more steps, the higher the total reward. The objective of an RL agent is to maximize the total reward or to achieve a minimum reward, for example.

2.4.2. A Random Agent

It is straightforward to implement an agent that only takes random actions. It cannot be expected that the agent will achieve a high total reward on average. However, every once in a while such an agent might be lucky.

The following Python code implements a random agent and collects the results from a larger number of games played:

In [11]: class RandomAgent:

def __init__(self):

self.env = gym.make('CartPole-v1')

def play(self, episodes=1):

self.trewards = list()

for e in range(episodes):

self.env.reset()

for step in range(1, 100):

a = self.env.action_space.sample()

state, reward, done, trunc, info = self.env.step(a)

if done:

self.trewards.append(step)

break

In [12]: ra = RandomAgent()

In [13]: ra.play(15)

In [14]: ra.trewards

Out[14]: [18, 28, 17, 25, 16, 41, 21, 19, 22, 9, 11, 13, 15, 14, 11]

In [15]: round(sum(ra.trewards) / len(ra.trewards), 2) (1)

Out[15]: 18.67| 1 | Average reward for the random agent. |

The results illustrate that the random agent does not survive that long. The total reward might be somewhat above 20 or below. In rare cases, a relatively high total reward — for example, close to 50 — might be observed (called a lucky punch).

2.4.3. The DQL Agent

This sub-section implements a DQL agent in multiple steps. This allows for a more detailed discussion of the single elements that make up the agent. Such an approach seems justified because this DQL agent will serve as a blueprint for the DQL agent that will be applied to financial problems.

To get started, the following Python code first does all the required imports and customizes TensorFlow.

In [16]: import os

import random

import warnings

import numpy as np

import tensorflow as tf

from tensorflow import keras

from collections import deque

from keras.layers import Dense

from keras.models import Sequential

In [17]: warnings.simplefilter('ignore')

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

os.environ['PYTHONHASHSEED'] = '0'

In [18]: from tensorflow.python.framework.ops import disable_eager_execution

disable_eager_execution() (1)

In [19]: opt = keras.optimizers.legacy.Adam(learning_rate=0.0001) (2)

In [20]: random.seed(100)

tf.random.set_seed(100)| 1 | Speeds up the training of the neural network. |

| 2 | Defines the optimizer to be used for the training. |

The following Python code shows the initial part of the DQLAgent class. Among other things, it defines the major parameters and instantiates the DNN that is used for representing the optimal action policy.

In [21]: class DQLAgent:

def __init__(self):

self.epsilon = 1.0 (1)

self.epsilon_decay = 0.9975 (2)

self.epsilon_min = 0.1 (3)

self.memory = deque(maxlen=2000) (4)

self.batch_size = 32 (5)

self.gamma = 0.9 (6)

self.trewards = list() (7)

self.max_treward = 0 (8)

self._create_model() (9)

self.env = gym.make('CartPole-v1') (10)

def _create_model(self):

self.model = Sequential()

self.model.add(Dense(24, activation='relu', input_dim=4))

self.model.add(Dense(24, activation='relu'))

self.model.add(Dense(2, activation='linear'))

self.model.compile(loss='mse', optimizer=opt)| 1 | The initial ratio epsilon with which exploration is implemented. |

| 2 | The factor by which epsilon is diminished. |

| 3 | The minimum value for epsilon. |

| 4 | The deque object which collects past experiences.[13] |

| 5 | The number of experiences used for replay. |

| 6 | The factor to discount future rewards. |

| 7 | A list object to collect total rewards. |

| 8 | A parameter to store the maximum total reward achieved. |

| 9 | Initiates the instantiation of the DNN. |

| 10 | Instantiates the CartPole environment. |

The next part of the DQLAgent class implements the .act() and .replay() methods for choosing an action and updating the DNN (optimal action policy) given past experiences.

In [22]: class DQLAgent(DQLAgent):

def act(self, state):

if random.random() < self.epsilon:

return self.env.action_space.sample() (1)

return np.argmax(self.model.predict(state)[0]) (2)

def replay(self):

batch = random.sample(self.memory, self.batch_size) (3)

for state, action, next_state, reward, done in batch:

if not done:

reward += self.gamma * np.amax(

self.model.predict(next_state)[0]) (4)

target = self.model.predict(state) (5)

target[0, action] = reward (6)

self.model.fit(state, target, epochs=2, verbose=False) (7)

if self.epsilon > self.epsilon_min:

self.epsilon *= self.epsilon_decay (8)| 1 | Chooses a random action. |

| 2 | Chooses an action according to the (current) optimal policy. |

| 3 | Randomly chooses a batch of past experiences for replay. |

| 4 | Combines the immediate and discounted future reward. |

| 5 | Generates the values for the state-action pairs. |

| 6 | Updates the value for the relevant state-action pair. |

| 7 | Trains/updates the DNN to account for the updated value. |

| 8 | Reduces epsilon by the epsilon_decay factor. |

The major elements are available to implement the core part of the DQLAgent class: the .learn() method which controls the interaction of the agent with the environment and the updating of the optimal policy. It also generates printed output to monitor the learning of the agent.

In [23]: class DQLAgent(DQLAgent):

def learn(self, episodes):

for e in range(1, episodes + 1):

state, _ = self.env.reset() (1)

state = np.reshape(state, [1, 4]) (2)

for f in range(1, 5000):

action = self.act(state) (3)

next_state, reward, done, trunc, _ = self.env.step(action) (4)

next_state = np.reshape(next_state, [1, 4]) (2)

self.memory.append(

[state, action, next_state, reward, done]) (4)

state = next_state (5)

if done or trunc:

self.trewards.append(f) (6)

self.max_treward = max(self.max_treward, f) (7)

templ = f'episode={e:4d} | treward={f:4d}'

templ += f' | max={self.max_treward:4d}'

print(templ, end='\r')

break

if len(self.memory) > self.batch_size:

self.replay() (8)

print()| 1 | The environment is reset. |

| 2 | The state object is reshaped.[14] |

| 3 | An action is chosen according to the .act() method, given the current state. |

| 4 | The relevant data points are collected for replay. |

| 5 | The state variable is updated to the current state. |

| 6 | Once terminated, the total reward is collected. |

| 7 | The maximum total reward is updated if necessary. |

| 8 | Replay is initiated as long as there are enough past experiences. |

With the following Python code, the class is complete. It implements the .test() method that allows the testing of the agent without exploration.

In [24]: class DQLAgent(DQLAgent):

def test(self, episodes):

for e in range(1, episodes + 1):

state, _ = self.env.reset()

state = np.reshape(state, [1, 4])

for f in range(1, 5001):

action = np.argmax(self.model.predict(state)[0]) (1)

state, reward, done, trunc, _ = self.env.step(action)

state = np.reshape(state, [1, 4])

if done or trunc:

print(f, end=' ')

break| 1 | For testing, only actions according to the optimal policy are chosen. |

The DQL agent in the form of the completed DQLAgent Python class can interact with the CartPole environment to improve its capabilities in playing the game — as measured by the rewards achieved.

In [25]: agent = DQLAgent()

In [26]: %time agent.learn(1500)

episode=1500 | treward= 224 | max= 500

CPU times: user 1min 52s, sys: 21.7 s, total: 2min 14s

Wall time: 1min 46s

In [27]: agent.epsilon

Out[27]: 0.09997053357470892

In [28]: agent.test(15)

500 373 326 500 348 303 500 330 392 304 250 389 249 204 500At first glance, it is clear that the DQL agent consistently outperforms the random agent by a large margin. Therefore, luck can’t be at work. On the other hand, without additional context, it is not clear whether the agent is a mediocre, good, or very good one.

In the documentation for the CartPole environment, you find that the threshold for total rewards is 475. This means that everything above 475 is considered to be good. By default, the environment is truncated at 500 meaning that reaching that level is considered to be a “success” for the game. However, the game can be played beyond 500 steps/rewards which might make the training of the DQL agent more efficient.

2.5. Q-Learning vs. Supervised Learning

At the core of DQL is a DNN that resembles those often used and seen in supervised learning. Against this background, what are the major differences between these two approaches in machine learning?

To get started, the objective of both approaches is different. Concerning DQL, the objective is to learn an optimal action policy that maximizes total reward (or minimizes total penalties, for example). On the other hand, supervised learning aims at learning a mapping between features and labels.

Secondly, in DQL the data is generated through interaction and in a sequential fashion. The sequence of the data in general matters, like the sequence of moves in chess matter. In supervised learning, the data set is generally given upfront in the form of (expert-)labeled data sets and the sequence often does not matter at all. Supervised learning, in that sense, is based on a given set of correct examples while DQL needs to generate appropriate data sets through interaction step-by-step.

Thirdly, in DQL feedback generally comes delayed given an action taken now. A DQL agent playing a game might not know until many steps later whether a current action is reward maximizing or not. The algorithm, however, makes sure that delayed feedback backpropagates in time through replay and updating of the DNN. In supervised learning, all relevant examples exist upfront and immediate feedback is available as to whether the algorithm gets the mapping between features and labels correct or not.

In summary, while DNNs might be at the core of both DQL and supervised learning they differ in fundamental ways in terms of their objective, the data they use, and the feedback their learning is based on.

2.6. Conclusions

Decision problems in economics and finance are manifold. One of the most important types is dynamic programming. This chapter classifies decision problems along the lines of different binary characteristics (such as discrete or continuous action space) and introduces dynamic programming as an important algorithm to solve dynamic decision problems in discrete time.

Deep Q-learning is formalized and illustrated based on a simple game — CartPole from the gymnasium Python environment. The major goals in this regard are to illustrate the API-based interaction with an environment suited for RL and the implementation of a DQL agent in the form of a self-contained Python class.

The next chapter develops a simple financial environment that mimics the behavior of the CartPole environment so that the DQL agent from this chapter can learn to play a financial prediction game.

2.7. References

Articles and books cited in this chapter:

-

Duffie, Darrell (1988): Security Markets: Stochastic Models. Academic Press, Boston.

-

Duffie, Darrell (2001): Dynamic Asset Pricing Theory. 3rd ed., Princeton University Press, Princeton.

-

Li, Yuxi (2018): “Deep Reinforcement Learning: An Overview.” https://doi.org/10.48550/arXiv.1701.07274.

-

Sargent, Thomas and John Stucharski (2023): Dynamic Programming. https://dp.quantecon.org/.

-

Stucharski, John (2009): Economic Dynamics—Theory and Computation. MIT Press, Cambridge and London.

-

Sundaram, Rangarajan (1996): A First Course in Optimization Theory. Cambridge University Press, Cambridge.

-

Watkins, Christopher (1989): Learning from Delayed Rewards. Ph.D. thesis, University of Cambridge.

-

Watkins, Christopher and Peter Dayan (1992): “Q-Learning.” Machine Learning, Vol. 8, 279-282.

3. Financial Q-Learning

Today’s algorithmic trading programs are relatively simple and make only limited use of AI. This is sure to change.

The previous chapter shows that a DQL agent can learn to play the game of CartPole quite well. What about financial applications? As this chapter shows, the agent can also learn to play a financial game that is about predicting the future movement in a financial market. To this end, this chapter implements a finance environment that mimics the behavior of the CartPole environment and trains the DQL agent from the previous chapter based on this finance environment.

This chapter is quite brief, but it illustrates an important point: with the appropriate environment, DQL can be applied to financial problems basically in the same way as it is applied to games and in other domains. Finance Environment develops step-by-step the Finance class that mimics the behavior of the CartPole class. DQL Agent slightly adjusts the DQLAgent class from CartPole as an Example. The adjustments are made to reflect the new context. The DQL agent can learn to predict future market movements with a significant margin over the baseline accuracy of 50%. Where the Analogy Fails finally discusses the major issues of the modeling approach and the Finance class when compared, for example, to a gaming environment such as the CartPole game.

3.1. Finance Environment

The idea for the finance environment to be implemented in the following is that of a prediction game. The environment uses static historical financial time series data to generate the states of the environment and the value to be predicted by the DQL agent. The state is given by four floating point numbers representing the four most recent data points in the time series — such as normalized price or return values. The value to be predicted is either 0 or 1. Here, 0 means that the financial time series value drops to a lower level (“market goes down”) and 1 means that the time series value rises to a higher level (“market goes up”).

To get started, the following Python class implements the behavior of the env.action_space object for the generation of random actions. The DQL agent relies on this capability in the context of exploration:

In [1]: import os

import random

In [2]: random.seed(100)

os.environ['PYTHONHASHSEED'] = '0'

In [3]: class ActionSpace:

def sample(self):

return random.randint(0, 1)

In [4]: action_space = ActionSpace()

In [5]: [action_space.sample() for _ in range(10)]

Out[5]: [0, 1, 1, 0, 1, 1, 1, 0, 0, 0]The Finance class, which is at the core of this chapter, implements the idea of the prediction game as described before. It starts with the definition of important parameters and objects:

In [6]: import numpy as np

import pandas as pd

In [7]: class Finance:

url = 'https://certificate.tpq.io/rl4finance.csv' (1)

def __init__(self, symbol, feature, min_accuracy=0.485, n_features=4):

self.symbol = symbol (2)

self.feature = feature (3)

self.n_features = n_features (4)

self.action_space = ActionSpace() (5)

self.min_accuracy = min_accuracy (6)

self._get_data() (7)

self._prepare_data() (8)

def _get_data(self):

self.raw = pd.read_csv(self.url,

index_col=0, parse_dates=True) (7)| 1 | The URL for the data set to be used (can be replaced). |

| 2 | The symbol for the time series to be used for the prediction game. |

| 3 | The type of feature to be used to define the state of the environment. |

| 4 | The number of feature values to be provided to the agent. |

| 5 | The ActionSpace object that is used for random action sampling. |

| 6 | The minimum prediction accuracy required for the agent to continue with the prediction game. |

| 7 | The retrieval of the financial time series data from the remote source. |

| 8 | The method call for the data preparation. |

The data set used in this class allows the selection of the following financial instruments:

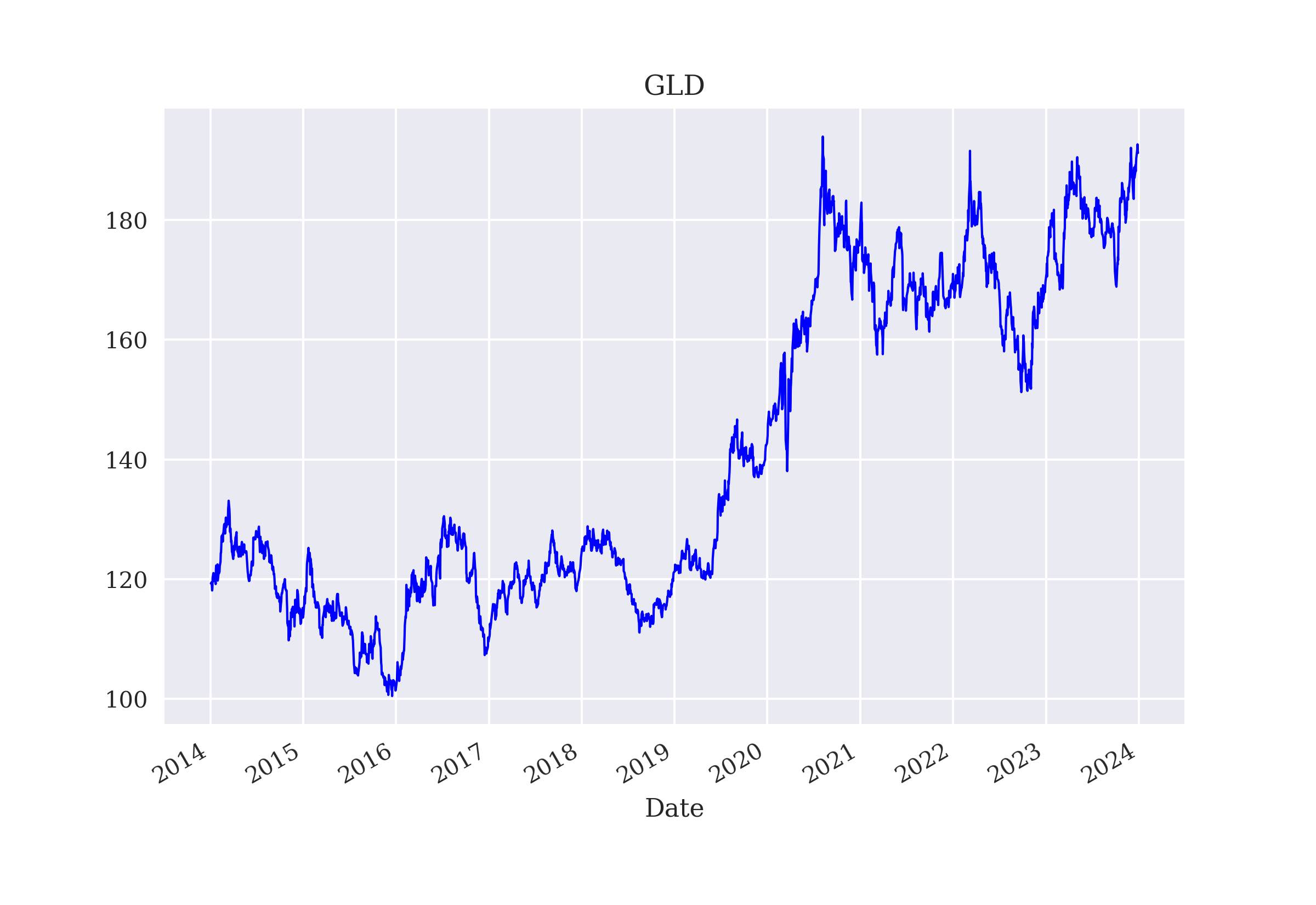

AAPL.O | Apple Stock MSFT.O | Microsoft Stock INTC.O | Intel Stock AMZN.O | Amazon Stock GS.N | Goldman Sachs Stock SPY | SPDR S&P 500 ETF Trust .SPX | S&P 500 Index .VIX | VIX Volatility Index EUR= | EUR/USD Exchange Rate XAU= | Gold Price GDX | VanEck Vectors Gold Miners ETF GLD | SPDR Gold Trust

A key method of the Finance class is the one for preparing the data for both the state description (features) and the prediction itself (labels). The state data is provided in normalized form, which is known to improve the performance of DNNs. From the implementation, it is obvious that the financial time series data is used in a static, non-random way. When the environment is reset to the initial state, it is always the same initial state.

In [8]: class Finance(Finance):

def _prepare_data(self):

self.data = pd.DataFrame(self.raw[self.symbol]).dropna() (1)

self.data['r'] = np.log(self.data / self.data.shift(1)) (2)

self.data['d'] = np.where(self.data['r'] > 0, 1, 0) (3)

self.data.dropna(inplace=True) (4)

self.data_ = (self.data - self.data.mean()) / self.data.std() (5)

def reset(self):

self.bar = self.n_features (6)

self.treward = 0 (7)

state = self.data_[self.feature].iloc[

self.bar - self.n_features:self.bar].values (8)

return state, {}| 1 | Selects the relevant time series data from the DataFrame object. |

| 2 | Generates a log return time series from the price time series. |

| 3 | Generates the binary, directional data to be predicted from the log returns. |

| 4 | Gets rid of all those rows in the DataFrame object that contain NaN (“not a number”) values. |

| 5 | Applies Gaussian normalization to the data. |

| 6 | Sets the current bar (position in the time series) to the value for the number of feature values. |

| 7 | Resets the total reward value to zero. |

| 8 | Generates the initial state object to be returned by the method. |

The following Python code finally implements the .step() method which moves the environment from one state to the next or signals that the game is terminated. One key idea is to check for the current prediction accuracy of the agent and to compare it to a minimum required accuracy. The purpose is to avoid that the agent simply plays along even if its current performance is much worse than, say, that of a random agent.

In [9]: class Finance(Finance):

def step(self, action):

if action == self.data['d'].iloc[self.bar]: (1)

correct = True

else:

correct = False

reward = 1 if correct else 0 (2)

self.treward += reward (3)

self.bar += 1 (4)

self.accuracy = self.treward / (self.bar - self.n_features) (5)

if self.bar >= len(self.data): (6)

done = True

elif reward == 1: (7)

done = False

elif (self.accuracy < self.min_accuracy) and (self.bar > 15): (8)

done = True

else:

done = False

next_state = self.data_[self.feature].iloc[

self.bar - self.n_features:self.bar].values (9)

return next_state, reward, done, False, {}| 1 | Checks whether the prediction (“action”) is correct. |

| 2 | Assigns a reward of +1 or 0 depending on correctness. |

| 3 | Increases the total reward accordingly. |

| 4 | The bar value is increased to move the environment forward on the time series. |

| 5 | The current accuracy is calculated. |

| 6 | Checks whether the end of the data set is reached. |

| 7 | Checks whether the prediction was correct. |

| 8 | Checks whether the current accuracy is above the minimum required accuracy. |

| 9 | Generates the next state object to be returned by the method. |

This completes the Finance class and allows the instantiation of objects based on the class as in the following Python code. The code also lists the available symbols in the financial data set used. It further illustrates that either normalized price or log returns data can be used to describe the state of the environment.

In [10]: fin = Finance(symbol='EUR=', feature='EUR=') (1)

In [11]: list(fin.raw.columns) (2)

Out[11]: ['AAPL.O',

'MSFT.O',

'INTC.O',

'AMZN.O',

'GS.N',

'.SPX',

'.VIX',

'SPY',

'EUR=',

'XAU=',

'GDX',

'GLD']

In [12]: fin.reset()

# four lagged, normalized price points

Out[12]: (array([2.74844931, 2.64643904, 2.69560062, 2.68085214]), {})

In [13]: fin.action_space.sample()

Out[13]: 1

In [14]: fin.step(fin.action_space.sample())

Out[14]: (array([2.64643904, 2.69560062, 2.68085214, 2.63046153]), 0, False,

False, {})

In [15]: fin = Finance('EUR=', 'r') (3)

In [16]: fin.reset()

# four lagged, normalized log returns

Out[16]: (array([-1.19130476, -1.21344494, 0.61099805, -0.16094865]), {})| 1 | Specifies the feature type to be normalized prices. |

| 2 | Shows the available symbols in the data set used. |

| 3 | Specifies the feature type to be normalized returns. |

To illustrate the interaction with the Finance environment, a random agent can again be considered. The total rewards that the agent achieves are, of course, quite low. They are slightly above 20 on average. This needs to be compared with the length of the data set which has more than 2,500 data points. In other words, a total reward of 2,500 or more is possible.

In [17]: class RandomAgent:

def __init__(self):

self.env = Finance('EUR=', 'r')

def play(self, episodes=1):

self.trewards = list()

for e in range(episodes):

self.env.reset()

for step in range(1, 100):

a = self.env.action_space.sample()

state, reward, done, trunc, info = self.env.step(a)

if done:

self.trewards.append(step)

break

In [18]: ra = RandomAgent()

In [19]: ra.play(15)

In [20]: ra.trewards

Out[20]: [17, 13, 17, 12, 12, 12, 13, 23, 31, 13, 12, 15]

In [21]: round(sum(ra.trewards) / len(ra.trewards), 2) (1)

Out[21]: 15.83

In [22]: len(fin.data) (2)

Out[22]: 2607| 1 | Average reward for the random agent. |

| 2 | Length of the data set which equals roughly the maximum total reward. |

3.2. DQL Agent

Equipped with the Finance environment, it is straightforward to let the DQL agent (DQLAgent class from The DQL Agent) play the financial prediction game.

The following Python code takes care of the required imports and configurations.

In [23]: import os

import random

import warnings

import numpy as np

import tensorflow as tf

from tensorflow import keras

from collections import deque

from keras.layers import Dense

from keras.models import Sequential

In [24]: warnings.simplefilter('ignore')

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

In [25]: from tensorflow.python.framework.ops import disable_eager_execution

disable_eager_execution()

In [26]: opt = keras.optimizers.legacy.Adam(learning_rate=0.0001)For the sake of completeness, the following code shows the DQLAgent class as a whole. It is basically the same code as in The DQL Agent with some minor adjustments for the context of this chapter.

In [27]: class DQLAgent:

def __init__(self, symbol, feature, min_accuracy, n_features=4):

self.epsilon = 1.0

self.epsilon_decay = 0.9975

self.epsilon_min = 0.1

self.memory = deque(maxlen=2000)

self.batch_size = 32

self.gamma = 0.5

self.trewards = list()

self.max_treward = 0

self.n_features = n_features

self._create_model()

self.env = Finance(symbol, feature,

min_accuracy, n_features) (1)

def _create_model(self):

self.model = Sequential()

self.model.add(Dense(24, activation='relu',

input_dim=self.n_features))

self.model.add(Dense(24, activation='relu'))

self.model.add(Dense(2, activation='linear'))

self.model.compile(loss='mse', optimizer=opt)